@@ -208,7 +201,7 @@ AVAIL_LLM_MODELS = ["gpt-3.5-turbo", "api2d-gpt-3.5-turbo", "gpt-4", "api2d-gpt-

P.S. 如果需要依赖Latex的插件功能,请见Wiki。另外,您也可以直接使用方案4或者方案0获取Latex功能。

-2. ChatGPT + ChatGLM2 + MOSS + LLAMA2 + 通义千问(需要熟悉[Nvidia Docker](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#installing-on-ubuntu-and-debian)运行)

+2. ChatGPT + ChatGLM2 + MOSS + LLAMA2 + 通义千问(需要熟悉[Nvidia Docker](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#installing-on-ubuntu-and-debian)运行时)

[](https://github.com/binary-husky/gpt_academic/actions/workflows/build-with-chatglm.yml)

``` sh

@@ -254,7 +247,6 @@ P.S. 如果需要依赖Latex的插件功能,请见Wiki。另外,您也可以

### II:自定义函数插件

编写强大的函数插件来执行任何你想得到的和想不到的任务。

-

本项目的插件编写、调试难度很低,只要您具备一定的python基础知识,就可以仿照我们提供的模板实现自己的插件功能。

详情请参考[函数插件指南](https://github.com/binary-husky/gpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97)。

@@ -357,7 +349,7 @@ GPT Academic开发者QQ群:`610599535`

- 已知问题

- 某些浏览器翻译插件干扰此软件前端的运行

- - 官方Gradio目前有很多兼容性Bug,请**务必使用`requirement.txt`安装Gradio**

+ - 官方Gradio目前有很多兼容性问题,请**务必使用`requirement.txt`安装Gradio**

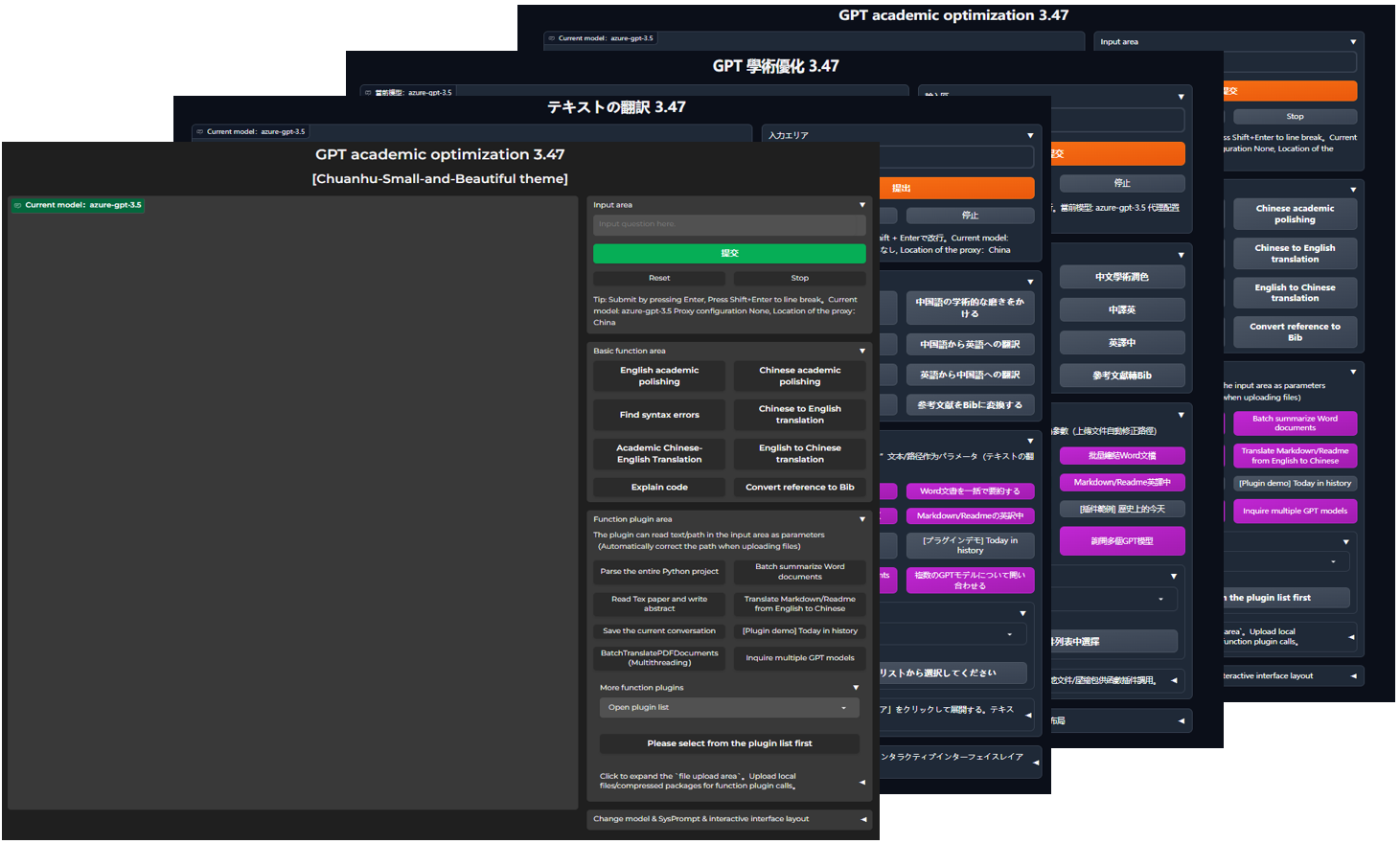

### III:主题

可以通过修改`THEME`选项(config.py)变更主题

diff --git a/version b/version

index 5f6de09c..cb4df5ae 100644

--- a/version

+++ b/version

@@ -1,5 +1,5 @@

{

- "version": 3.61,

+ "version": 3.62,

"show_feature": true,

- "new_feature": "修复潜在的多用户冲突问题 <-> 接入Deepseek Coder <-> AutoGen多智能体插件测试版 <-> 修复本地模型在Windows下的加载BUG <-> 支持文心一言v4和星火v3 <-> 支持GLM3和智谱的API <-> 解决本地模型并发BUG <-> 支持动态追加基础功能按钮"

+ "new_feature": "修复若干隐蔽的内存BUG <-> 修复多用户冲突问题 <-> 接入Deepseek Coder <-> AutoGen多智能体插件测试版 <-> 修复本地模型在Windows下的加载BUG <-> 支持文心一言v4和星火v3 <-> 支持GLM3和智谱的API <-> 解决本地模型并发BUG <-> 支持动态追加基础功能按钮"

}
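The `version` file is plain JSON. The dynamic Installation badge introduced later in this series reads its `version` field with the query `$.version`. The loader below is hypothetical, since the project's own reader is not part of this diff. It is a minimal sketch of how a consumer could parse the file, assuming features are always joined with ` <-> `:

```python
import json

# Content of the `version` file after this patch, with new_feature shortened.
VERSION_TEXT = """
{
  "version": 3.62,
  "show_feature": true,
  "new_feature": "修复若干隐蔽的内存BUG <-> 修复多用户冲突问题 <-> 接入Deepseek Coder"
}
"""

def read_version_info(text):
    """Return (version, features) parsed from the version file contents."""
    info = json.loads(text)
    version = info["version"]
    # Features are stored as one string separated by "<->" (assumed convention).
    features = [f.strip() for f in info.get("new_feature", "").split("<->") if f.strip()]
    # Hide the feature list when show_feature is false or missing.
    if not info.get("show_feature", False):
        features = []
    return version, features

version, features = read_version_info(VERSION_TEXT)
print(version)      # 3.62
print(features[0])  # 修复若干隐蔽的内存BUG
```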

From a64d5500450d0bad901f26e4493320d397fb9915 Mon Sep 17 00:00:00 2001

From: binary-husky

Date: Thu, 30 Nov 2023 23:23:54 +0800

Subject: [PATCH 16/32] Fix some line breaks in README

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

README.md | 19 ++++++++++---------

1 file changed, 10 insertions(+), 9 deletions(-)

diff --git a/README.md b/README.md

index 8102da8d..54bf7c1f 100644

--- a/README.md

+++ b/README.md

@@ -4,7 +4,7 @@

>

> 2023.11.7: 安装依赖时,请选择`requirements.txt`中**指定的版本**。 安装命令:`pip install -r requirements.txt`。本项目开源免费,近期发现有人蔑视开源协议并利用本项目违规圈钱,请提高警惕,谨防上当受骗。

-

+

@@ -13,14 +13,13 @@

[![Github][Github-image]][Github-url]

[![License][License-image]][License-url]

-

[![Releases][Releases-image]][Releases-url]

[![Installation][Installation-image]][Installation-url]

[![Wiki][Wiki-image]][Wiki-url]

[![PR][PRs-image]][PRs-url]

-[License-image]: https://img.shields.io/badge/LICENSE-GPL3.0-orange?&style=for-the-badge

-[Github-image]: https://img.shields.io/badge/github-12100E.svg?&style=for-the-badge&logo=github&logoColor=white

+[License-image]: https://img.shields.io/badge/LICENSE-GPL3.0-orange?&style=flat-square

+[Github-image]: https://img.shields.io/badge/github-12100E.svg?&style=flat-square

[Releases-image]: https://img.shields.io/badge/Releases-v3.6.0-blue?style=flat-square

[Installation-image]: https://img.shields.io/badge/Installation-v3.6.1-blue?style=flat-square

[Wiki-image]: https://img.shields.io/badge/wiki-项目文档-black?style=flat-square

@@ -35,14 +34,14 @@

+

**如果喜欢这个项目,请给它一个Star;如果您发明了好用的快捷键或插件,欢迎发pull requests!**

If you like this project, please give it a Star. Read this in [English](docs/README.English.md) | [日本語](docs/README.Japanese.md) | [한국어](docs/README.Korean.md) | [Русский](docs/README.Russian.md) | [Français](docs/README.French.md). All translations have been provided by the project itself. To translate this project to arbitrary language with GPT, read and run [`multi_language.py`](multi_language.py) (experimental).

-

+

+

-> **Note**

->

> 1.请注意只有 **高亮** 标识的插件(按钮)才支持读取文件,部分插件位于插件区的**下拉菜单**中。另外我们以**最高优先级**欢迎和处理任何新插件的PR。

>

> 2.本项目中每个文件的功能都在[自译解报告](https://github.com/binary-husky/gpt_academic/wiki/GPT‐Academic项目自译解报告)`self_analysis.md`详细说明。随着版本的迭代,您也可以随时自行点击相关函数插件,调用GPT重新生成项目的自我解析报告。常见问题请查阅wiki。

@@ -52,8 +51,6 @@ If you like this project, please give it a Star. Read this in [English](docs/REA

-# Features Overview

-

功能(⭐= 近期新增功能) | 描述

@@ -118,6 +115,8 @@ Latex论文一键校对 | [插件] 仿Grammarly对Latex文章进行语法、拼

+

# Installation

### 安装方法I:直接运行 (Windows, Linux or MacOS)

@@ -224,6 +223,7 @@ P.S. 如果需要依赖Latex的插件功能,请见Wiki。另外,您也可以

- 使用WSL2(Windows Subsystem for Linux 子系统)。请访问[部署wiki-2](https://github.com/binary-husky/gpt_academic/wiki/%E4%BD%BF%E7%94%A8WSL2%EF%BC%88Windows-Subsystem-for-Linux-%E5%AD%90%E7%B3%BB%E7%BB%9F%EF%BC%89%E9%83%A8%E7%BD%B2)

- 如何在二级网址(如`http://localhost/subpath`)下运行。请访问[FastAPI运行说明](docs/WithFastapi.md)

+

# Advanced Usage

### I:自定义新的便捷按钮(学术快捷键)

@@ -250,6 +250,7 @@ P.S. 如果需要依赖Latex的插件功能,请见Wiki。另外,您也可以

本项目的插件编写、调试难度很低,只要您具备一定的python基础知识,就可以仿照我们提供的模板实现自己的插件功能。

详情请参考[函数插件指南](https://github.com/binary-husky/gpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97)。

+

# Updates

### I:动态

From d8958da8cd0153a717a6585b3faf1d72bd6803ad Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Fri, 1 Dec 2023 09:28:22 +0800

Subject: [PATCH 17/32] Fix typo

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

crazy_functional.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/crazy_functional.py b/crazy_functional.py

index 3d4df718..3b8b9453 100644

--- a/crazy_functional.py

+++ b/crazy_functional.py

@@ -489,7 +489,7 @@ def get_crazy_functions():

})

from crazy_functions.Latex输出PDF结果 import Latex翻译中文并重新编译PDF

function_plugins.update({

- "Arixv论文精细翻译(输入arxivID)[需Latex]": {

+ "Arxiv论文精细翻译(输入arxivID)[需Latex]": {

"Group": "学术",

"Color": "stop",

"AsButton": False,

From 3d6ee5c755a1506489c2fb031aa9cdc275d2a737 Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 09:29:45 +0800

Subject: [PATCH 18/32] Convert README badges to dynamic badges

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

Convert the license, version, and release badges to dynamic badges to reduce README maintenance.

---

README.md | 16 +++++++++-------

1 file changed, 9 insertions(+), 7 deletions(-)

diff --git a/README.md b/README.md

index 54bf7c1f..c0e0a836 100644

--- a/README.md

+++ b/README.md

@@ -18,15 +18,15 @@

[![Wiki][Wiki-image]][Wiki-url]

[![PR][PRs-image]][PRs-url]

-[License-image]: https://img.shields.io/badge/LICENSE-GPL3.0-orange?&style=flat-square

-[Github-image]: https://img.shields.io/badge/github-12100E.svg?&style=flat-square

-[Releases-image]: https://img.shields.io/badge/Releases-v3.6.0-blue?style=flat-square

-[Installation-image]: https://img.shields.io/badge/Installation-v3.6.1-blue?style=flat-square

+[Github-image]: https://img.shields.io/badge/github-12100E.svg?style=flat-square

+[License-image]: https://img.shields.io/github/license/binary-husky/gpt_academic?label=License&style=flat-square&color=orange

+[Releases-image]: https://img.shields.io/github/release/binary-husky/gpt_academic?label=Release&style=flat-square&color=blue

+[Installation-image]: https://img.shields.io/badge/dynamic/json?color=blue&url=https://raw.githubusercontent.com/binary-husky/gpt_academic/master/version&query=$.version&label=Installation&style=flat-square

[Wiki-image]: https://img.shields.io/badge/wiki-项目文档-black?style=flat-square

[PRs-image]: https://img.shields.io/badge/PRs-welcome-pink?style=flat-square

-[License-url]: https://github.com/binary-husky/gpt_academic/blob/master/LICENSE

[Github-url]: https://github.com/binary-husky/gpt_academic

+[License-url]: https://github.com/binary-husky/gpt_academic/blob/master/LICENSE

[Releases-url]: https://github.com/binary-husky/gpt_academic/releases

[Installation-url]: https://github.com/binary-husky/gpt_academic#installation

[Wiki-url]: https://github.com/binary-husky/gpt_academic/wiki

@@ -38,7 +38,9 @@

**如果喜欢这个项目,请给它一个Star;如果您发明了好用的快捷键或插件,欢迎发pull requests!**

-If you like this project, please give it a Star. Read this in [English](docs/README.English.md) | [日本語](docs/README.Japanese.md) | [한국어](docs/README.Korean.md) | [Русский](docs/README.Russian.md) | [Français](docs/README.French.md). All translations have been provided by the project itself. To translate this project to arbitrary language with GPT, read and run [`multi_language.py`](multi_language.py) (experimental).

+If you like this project, please give it a Star.

+

+Read this in [English](docs/README.English.md) | [日本語](docs/README.Japanese.md) | [한국어](docs/README.Korean.md) | [Русский](docs/README.Russian.md) | [Français](docs/README.French.md). All translations have been provided by the project itself. To translate this project to arbitrary language with GPT, read and run [`multi_language.py`](multi_language.py) (experimental).

@@ -47,7 +49,7 @@ If you like this project, please give it a Star. Read this in [English](docs/REA

> 2.本项目中每个文件的功能都在[自译解报告](https://github.com/binary-husky/gpt_academic/wiki/GPT‐Academic项目自译解报告)`self_analysis.md`详细说明。随着版本的迭代,您也可以随时自行点击相关函数插件,调用GPT重新生成项目的自我解析报告。常见问题请查阅wiki。

> [](#installation) [](https://github.com/binary-husky/gpt_academic/releases) [](https://github.com/binary-husky/gpt_academic/wiki/项目配置说明) []([https://github.com/binary-husky/gpt_academic/wiki/项目配置说明](https://github.com/binary-husky/gpt_academic/wiki))

>

-> 3.本项目兼容并鼓励尝试国产大语言模型ChatGLM等。支持多个api-key共存,可在配置文件中填写如`API_KEY="openai-key1,openai-key2,azure-key3,api2d-key4"`。需要临时更换`API_KEY`时,在输入区输入临时的`API_KEY`然后回车键提交后即可生效。

+> 3.本项目兼容并鼓励尝试国产大语言模型ChatGLM等。支持多个api-key共存,可在配置文件中填写如`API_KEY="openai-key1,openai-key2,azure-key3,api2d-key4"`。需要临时更换`API_KEY`时,在输入区输入临时的`API_KEY`然后回车键提交即可生效。
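Note 3 above describes a single comma-separated `API_KEY` that holds keys for several providers. The project's real selection logic lives in `select_api_key` in `toolbox`, which is imported in `crazy_functions/图片生成.py` later in this series, and is not shown here. The sketch below only illustrates splitting the setting. The prefix filter is an assumption that matches the README's placeholder names, not real key formats:

```python
def split_api_keys(api_key_setting):
    """Split a comma-separated API_KEY value into a list of non-empty keys."""
    return [k.strip() for k in api_key_setting.split(",") if k.strip()]

def keys_for_provider(keys, prefix):
    """Keep keys with a given (hypothetical) provider prefix, e.g. 'azure-'."""
    return [k for k in keys if k.startswith(prefix)]

keys = split_api_keys("openai-key1,openai-key2,azure-key3,api2d-key4")
print(keys)                                # ['openai-key1', 'openai-key2', 'azure-key3', 'api2d-key4']
print(keys_for_provider(keys, "openai-"))  # ['openai-key1', 'openai-key2']
```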

From e7f4c804eb5bf6a08a0b91eda74c4896cc8f0ab9 Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:27:25 +0800

Subject: [PATCH 19/32] Rename a plugin category

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

Rename the existing category "对话" (Conversation) to "对话&作图" (Conversation & Image Generation).

---

config.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/config.py b/config.py

index f170a2bb..f1d27289 100644

--- a/config.py

+++ b/config.py

@@ -82,7 +82,7 @@ MAX_RETRY = 2

# 插件分类默认选项

-DEFAULT_FN_GROUPS = ['对话', '编程', '学术', '智能体']

+DEFAULT_FN_GROUPS = ['对话&作图', '编程', '学术', '智能体']

# 模型选择是 (注意: LLM_MODEL是默认选中的模型, 它*必须*被包含在AVAIL_LLM_MODELS列表中 )

From e8dd3c02f2f22d72cadce87b86c9cbab73e8f488 Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:30:25 +0800

Subject: [PATCH 20/32] Update the category of each plugin

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

crazy_functional.py | 38 +++++++++++++++++++-------------------

1 file changed, 19 insertions(+), 19 deletions(-)

diff --git a/crazy_functional.py b/crazy_functional.py

index 3b8b9453..8e786e6d 100644

--- a/crazy_functional.py

+++ b/crazy_functional.py

@@ -40,7 +40,7 @@ def get_crazy_functions():

function_plugins = {

"虚空终端": {

- "Group": "对话|编程|学术|智能体",

+ "Group": "对话&作图|编程|学术|智能体",

"Color": "stop",

"AsButton": True,

"Function": HotReload(虚空终端)

@@ -53,20 +53,20 @@ def get_crazy_functions():

"Function": HotReload(解析一个Python项目)

},

"载入对话历史存档(先上传存档或输入路径)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"Info": "载入对话历史存档 | 输入参数为路径",

"Function": HotReload(载入对话历史存档)

},

"删除所有本地对话历史记录(谨慎操作)": {

- "Group": "对话",

+ "Group": "对话&作图",

"AsButton": False,

"Info": "删除所有本地对话历史记录,谨慎操作 | 不需要输入参数",

"Function": HotReload(删除所有本地对话历史记录)

},

"清除所有缓存文件(谨慎操作)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False, # 加入下拉菜单中

"Info": "清除所有缓存文件,谨慎操作 | 不需要输入参数",

@@ -180,19 +180,19 @@ def get_crazy_functions():

"Function": HotReload(批量生成函数注释)

},

"保存当前的对话": {

- "Group": "对话",

+ "Group": "对话&作图",

"AsButton": True,

"Info": "保存当前的对话 | 不需要输入参数",

"Function": HotReload(对话历史存档)

},

"[多线程Demo]解析此项目本身(源码自译解)": {

- "Group": "对话|编程",

+ "Group": "对话&作图|编程",

"AsButton": False, # 加入下拉菜单中

"Info": "多线程解析并翻译此项目的源码 | 不需要输入参数",

"Function": HotReload(解析项目本身)

},

"历史上的今天": {

- "Group": "对话",

+ "Group": "对话&作图",

"AsButton": True,

"Info": "查看历史上的今天事件 (这是一个面向开发者的插件Demo) | 不需要输入参数",

"Function": HotReload(高阶功能模板函数)

@@ -205,7 +205,7 @@ def get_crazy_functions():

"Function": HotReload(批量翻译PDF文档)

},

"询问多个GPT模型": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": True,

"Function": HotReload(同时问询)

@@ -300,7 +300,7 @@ def get_crazy_functions():

from crazy_functions.联网的ChatGPT import 连接网络回答问题

function_plugins.update({

"连接网络回答问题(输入问题后点击该插件,需要访问谷歌)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False, # 加入下拉菜单中

# "Info": "连接网络回答问题(需要访问谷歌)| 输入参数是一个问题",

@@ -310,7 +310,7 @@ def get_crazy_functions():

from crazy_functions.联网的ChatGPT_bing版 import 连接bing搜索回答问题

function_plugins.update({

"连接网络回答问题(中文Bing版,输入问题后点击该插件)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False, # 加入下拉菜单中

"Info": "连接网络回答问题(需要访问中文Bing)| 输入参数是一个问题",

@@ -341,7 +341,7 @@ def get_crazy_functions():

from crazy_functions.询问多个大语言模型 import 同时问询_指定模型

function_plugins.update({

"询问多个GPT模型(手动指定询问哪些模型)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True, # 调用时,唤起高级参数输入区(默认False)

@@ -357,7 +357,7 @@ def get_crazy_functions():

from crazy_functions.图片生成 import 图片生成_DALLE2, 图片生成_DALLE3

function_plugins.update({

"图片生成_DALLE2 (先切换模型到openai或api2d)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True, # 调用时,唤起高级参数输入区(默认False)

@@ -368,7 +368,7 @@ def get_crazy_functions():

})

function_plugins.update({

"图片生成_DALLE3 (先切换模型到openai或api2d)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True, # 调用时,唤起高级参数输入区(默认False)

@@ -385,7 +385,7 @@ def get_crazy_functions():

from crazy_functions.总结音视频 import 总结音视频

function_plugins.update({

"批量总结音视频(输入路径或上传压缩包)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True,

@@ -402,7 +402,7 @@ def get_crazy_functions():

from crazy_functions.数学动画生成manim import 动画生成

function_plugins.update({

"数学动画生成(Manim)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"Info": "按照自然语言描述生成一个动画 | 输入参数是一段话",

@@ -433,7 +433,7 @@ def get_crazy_functions():

from crazy_functions.Langchain知识库 import 知识库问答

function_plugins.update({

"构建知识库(先上传文件素材,再运行此插件)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True,

@@ -449,7 +449,7 @@ def get_crazy_functions():

from crazy_functions.Langchain知识库 import 读取知识库作答

function_plugins.update({

"知识库问答(构建知识库后,再运行此插件)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True,

@@ -465,7 +465,7 @@ def get_crazy_functions():

from crazy_functions.交互功能函数模板 import 交互功能模板函数

function_plugins.update({

"交互功能模板Demo函数(查找wallhaven.cc的壁纸)": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": False,

"Function": HotReload(交互功能模板函数)

@@ -527,7 +527,7 @@ def get_crazy_functions():

from crazy_functions.语音助手 import 语音助手

function_plugins.update({

"实时语音对话": {

- "Group": "对话",

+ "Group": "对话&作图",

"Color": "stop",

"AsButton": True,

"Info": "这是一个时刻聆听着的语音对话助手 | 没有输入参数",
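After these two patches, a plugin's `Group` string lists one or more categories separated by `|`. The `&` is simply part of the new name `对话&作图`. The sketch below assumes the UI splits on `|`; the filtering code itself is not in this diff. A small hypothetical check like this one would catch any plugin still tagged with the old `对话` name after the rename. The registry is an abbreviated copy of entries shown above:

```python
# Abbreviated, hypothetical copy of the registry shape used in crazy_functional.py.
function_plugins = {
    "虚空终端": {"Group": "对话&作图|编程|学术|智能体", "AsButton": True},
    "保存当前的对话": {"Group": "对话&作图", "AsButton": True},
    "[多线程Demo]解析此项目本身(源码自译解)": {"Group": "对话&作图|编程", "AsButton": False},
}

DEFAULT_FN_GROUPS = ['对话&作图', '编程', '学术', '智能体']

def plugin_groups(plugin):
    """Split a Group string on '|'; '&' stays inside a single group name."""
    return [g for g in plugin.get("Group", "").split("|") if g]

def plugins_in_group(plugins, group):
    """Return the names of plugins that list `group` among their groups."""
    return [name for name, p in plugins.items() if group in plugin_groups(p)]

def unknown_groups(plugins, known):
    """Return group names used by plugins but missing from `known`."""
    used = {g for p in plugins.values() for g in plugin_groups(p)}
    return sorted(used - set(known))

print(plugins_in_group(function_plugins, "编程"))
print(unknown_groups(function_plugins, DEFAULT_FN_GROUPS))  # []
```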

From ef12d4f754bd955431063d14963f25709c174f20 Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:31:50 +0800

Subject: [PATCH 21/32] Update the DALLE3 argument input hint

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

crazy_functional.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/crazy_functional.py b/crazy_functional.py

index 8e786e6d..dcf7f6b8 100644

--- a/crazy_functional.py

+++ b/crazy_functional.py

@@ -372,7 +372,7 @@ def get_crazy_functions():

"Color": "stop",

"AsButton": False,

"AdvancedArgs": True, # 调用时,唤起高级参数输入区(默认False)

- "ArgsReminder": "在这里输入分辨率, 如1024x1024(默认),支持 1024x1024, 1792x1024, 1024x1792。如需生成高清图像,请输入 1024x1024-HD, 1792x1024-HD, 1024x1792-HD。", # 高级参数输入区的显示提示

+ "ArgsReminder": "在这里输入自定义参数“分辨率-质量(可选)-风格(可选)”, 参数示例“1024x1024-hd-vivid” || 分辨率支持 1024x1024(默认)//1792x1024//1024x1792 || 质量支持 -standard(默认)//-hd || 风格支持 -vivid(默认)//-natural", # 高级参数输入区的显示提示

"Info": "使用DALLE3生成图片 | 输入参数字符串,提供图像的内容",

"Function": HotReload(图片生成_DALLE3)

},
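The new `ArgsReminder` describes the argument as `resolution-quality(optional)-style(optional)`. The parsing code added in the next patch splits on `-`, silently ignores unknown tokens, and passes the resolution through unchecked. The sketch below is a hypothetical stricter variant that rejects unsupported values early. Its allowed sets are taken from the reminder text:

```python
# Allowed values, taken from the ArgsReminder text above.
SUPPORTED_RESOLUTIONS = {"1024x1024", "1792x1024", "1024x1792"}
QUALITIES = {"standard", "hd"}
STYLES = {"vivid", "natural"}

def parse_dalle3_args(arg, default="1024x1024-standard-vivid"):
    """Return (resolution, quality, style); raise ValueError on bad input."""
    # An empty or None argument falls back to the default string.
    parts = (arg or default).strip().lower().split("-")
    resolution, quality, style = parts[0], "standard", "vivid"
    if resolution not in SUPPORTED_RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution}")
    # Remaining tokens may appear in any order; each must be a known option.
    for part in parts[1:]:
        if part in QUALITIES:
            quality = part
        elif part in STYLES:
            style = part
        else:
            raise ValueError(f"unknown option: {part}")
    return resolution, quality, style

print(parse_dalle3_args("1024x1024-hd-vivid"))  # ('1024x1024', 'hd', 'vivid')
print(parse_dalle3_args("1792x1024-natural"))   # ('1792x1024', 'standard', 'natural')
print(parse_dalle3_args(""))                    # ('1024x1024', 'standard', 'vivid')
```

Failing early gives the user a clear message in the chat window instead of an API error after the request is sent.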

From 6126024f2c94e6e56accd013736d4c0427e9596e Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:36:59 +0800

Subject: [PATCH 22/32] Add the 'style' parameter for dall-e-3

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

Add the 'style' parameter for dall-e-3 (see platform.openai.com/docs/api-reference) and revise the argument-parsing logic used when generating images with dall-e-3.

---

crazy_functions/图片生成.py | 27 +++++++++++++++++----------

1 file changed, 17 insertions(+), 10 deletions(-)

diff --git a/crazy_functions/图片生成.py b/crazy_functions/图片生成.py

index 642a9e22..104d4034 100644

--- a/crazy_functions/图片生成.py

+++ b/crazy_functions/图片生成.py

@@ -2,7 +2,7 @@ from toolbox import CatchException, update_ui, get_conf, select_api_key, get_log

from crazy_functions.multi_stage.multi_stage_utils import GptAcademicState

-def gen_image(llm_kwargs, prompt, resolution="1024x1024", model="dall-e-2", quality=None):

+def gen_image(llm_kwargs, prompt, resolution="1024x1024", model="dall-e-2", quality=None, style=None):

import requests, json, time, os

from request_llms.bridge_all import model_info

@@ -25,7 +25,10 @@ def gen_image(llm_kwargs, prompt, resolution="1024x1024", model="dall-e-2", qual

'model': model,

'response_format': 'url'

}

- if quality is not None: data.update({'quality': quality})

+ if quality is not None:

+ data['quality'] = quality

+ if style is not None:

+ data['style'] = style

response = requests.post(url, headers=headers, json=data, proxies=proxies)

print(response.content)

try:

@@ -115,13 +118,18 @@ def 图片生成_DALLE3(prompt, llm_kwargs, plugin_kwargs, chatbot, history, sys

chatbot.append(("您正在调用“图像生成”插件。", "[Local Message] 生成图像, 请先把模型切换至gpt-*或者api2d-*。如果中文Prompt效果不理想, 请尝试英文Prompt。正在处理中 ....."))

yield from update_ui(chatbot=chatbot, history=history) # 刷新界面 由于请求gpt需要一段时间,我们先及时地做一次界面更新

if ("advanced_arg" in plugin_kwargs) and (plugin_kwargs["advanced_arg"] == ""): plugin_kwargs.pop("advanced_arg")

- resolution = plugin_kwargs.get("advanced_arg", '1024x1024').lower()

- if resolution.endswith('-hd'):

- resolution = resolution.replace('-hd', '')

- quality = 'hd'

- else:

- quality = 'standard'

- image_url, image_path = gen_image(llm_kwargs, prompt, resolution, model="dall-e-3", quality=quality)

+ resolution_arg = plugin_kwargs.get("advanced_arg", '1024x1024-standard-vivid').lower()

+ parts = resolution_arg.split('-')

+ resolution = parts[0] # 解析分辨率

+ quality = 'standard' # 质量与风格默认值

+ style = 'vivid'

+ # 遍历检查是否有额外参数

+ for part in parts[1:]:

+ if part in ['hd', 'standard']:

+ quality = part

+ elif part in ['vivid', 'natural']:

+ style = part

+ image_url, image_path = gen_image(llm_kwargs, prompt, resolution, model="dall-e-3", quality=quality, style=style)

chatbot.append([prompt,

f'图像中转网址:

`{image_url}`

'+

f'中转网址预览:

'

@@ -201,4 +209,3 @@ def 图片修改_DALLE2(prompt, llm_kwargs, plugin_kwargs, chatbot, history, sys

f'本地文件预览:

'

])

yield from update_ui(chatbot=chatbot, history=history) # 刷新界面 界面更新

-
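`gen_image` now adds `quality` and `style` to the request body only when they are not `None`. This matters because, per OpenAI's Images API reference, both fields apply only to `dall-e-3`. Below is a standalone sketch of the body construction. The diff shows only part of the dictionary (`model` and `response_format`), so the `prompt`, `n`, and `size` fields are assumptions based on the public Images API:

```python
import json

def build_image_request(prompt, resolution="1024x1024", model="dall-e-2",
                        quality=None, style=None):
    """Build a JSON body for an images/generations request (sketch)."""
    data = {
        "prompt": prompt,
        "n": 1,                   # assumed: one image per request
        "size": resolution,
        "model": model,
        "response_format": "url",
    }
    # Optional fields are only sent when set, as in the patched gen_image().
    if quality is not None:
        data["quality"] = quality
    if style is not None:
        data["style"] = style
    return data

print(json.dumps(build_image_request("a cat", model="dall-e-3", quality="hd", style="natural")))
```

A call that leaves `quality` and `style` as `None` sends neither field, so the same helper stays safe for `dall-e-2`.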

From 1134723c80cb9a68bd60a84e28b666f8cbe8be3e Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:40:11 +0800

Subject: [PATCH 23/32] Update plugin categories in docs

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

docs/translate_english.json | 9 ++++-----

1 file changed, 4 insertions(+), 5 deletions(-)

diff --git a/docs/translate_english.json b/docs/translate_english.json

index 955dcaf9..400ec972 100644

--- a/docs/translate_english.json

+++ b/docs/translate_english.json

@@ -2183,9 +2183,8 @@

"找不到合适插件执行该任务": "Cannot find a suitable plugin to perform this task",

"接驳VoidTerminal": "Connect to VoidTerminal",

"**很好": "**Very good",

- "对话|编程": "Conversation|Programming",

- "对话|编程|学术": "Conversation|Programming|Academic",

- "4. 建议使用 GPT3.5 或更强的模型": "4. It is recommended to use GPT3.5 or a stronger model",

+ "对话&作图|编程": "Conversation&ImageGenerating|Programming",

+ "对话&作图|编程|学术": "Conversation&ImageGenerating|Programming|Academic", "4. 建议使用 GPT3.5 或更强的模型": "4. It is recommended to use GPT3.5 or a stronger model",

"「请调用插件翻译PDF论文": "Please call the plugin to translate the PDF paper",

"3. 如果您使用「调用插件xxx」、「修改配置xxx」、「请问」等关键词": "3. If you use keywords such as 'call plugin xxx', 'modify configuration xxx', 'please', etc.",

"以下是一篇学术论文的基本信息": "The following is the basic information of an academic paper",

@@ -2630,7 +2629,7 @@

"已经被记忆": "Already memorized",

"默认用英文的": "Default to English",

"错误追踪": "Error tracking",

- "对话|编程|学术|智能体": "Dialogue|Programming|Academic|Intelligent agent",

+ "对话&作图|编程|学术|智能体": "Conversation&ImageGenerating|Programming|Academic|Intelligent agent",

"请检查": "Please check",

"检测到被滞留的缓存文档": "Detected cached documents being left behind",

"还有哪些场合允许使用代理": "What other occasions allow the use of proxies",

@@ -2904,4 +2903,4 @@

"请配置ZHIPUAI_API_KEY": "Please configure ZHIPUAI_API_KEY",

"单个azure模型": "Single Azure model",

"预留参数 context 未实现": "Reserved parameter 'context' not implemented"

-}

\ No newline at end of file

+}
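The group names in `crazy_functional.py` must match the keys in this translation file exactly, assuming translation is an exact-string lookup. A hypothetical consistency check like the one below catches mismatched keys. The sample table is illustrative and deliberately misspells one key (`对话&编程` instead of `对话&作图`):

```python
import json

# Illustrative excerpt in the shape of docs/translate_english.json.
translations = json.loads("""{
  "对话&作图|编程": "Conversation&ImageGenerating|Programming",
  "对话&作图|编程|学术": "Conversation&ImageGenerating|Programming|Academic",
  "对话&编程|编程|学术|智能体": "Conversation&ImageGenerating|Programming|Academic|Intelligent agent"
}""")

# Group strings as used by plugins after the rename.
group_strings = ["对话&作图|编程", "对话&作图|编程|学术", "对话&作图|编程|学术|智能体"]

def missing_translations(groups, table):
    """Return Group strings that have no exact key in the translation table."""
    return [g for g in groups if g not in table]

print(missing_translations(group_strings, translations))
# ['对话&作图|编程|学术|智能体']
```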

From 2aab6cb708c6f82de3bd181bab0232da7eb3ed9c Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:50:20 +0800

Subject: [PATCH 24/32] Improve some translations

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

docs/translate_english.json | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/docs/translate_english.json b/docs/translate_english.json

index 400ec972..bf09f66c 100644

--- a/docs/translate_english.json

+++ b/docs/translate_english.json

@@ -923,7 +923,7 @@

"的第": "The",

"个片段": "fragment",

"总结文章": "Summarize the article",

- "根据以上的对话": "According to the above dialogue",

+ "根据以上的对话": "According to the conversation above",

"的主要内容": "The main content of",

"所有文件都总结完成了吗": "Are all files summarized?",

"如果是.doc文件": "If it is a .doc file",

@@ -1501,7 +1501,7 @@

"发送请求到OpenAI后": "After sending the request to OpenAI",

"上下布局": "Vertical Layout",

"左右布局": "Horizontal Layout",

- "对话窗的高度": "Height of the Dialogue Window",

+ "对话窗的高度": "Height of the Conversation Window",

"重试的次数限制": "Retry Limit",

"gpt4现在只对申请成功的人开放": "GPT-4 is now only open to those who have successfully applied",

"提高限制请查询": "Please check for higher limits",

From d99b443b4cae5d7599b7060ae488ce14ab6d0a11 Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Fri, 1 Dec 2023 10:51:04 +0800

Subject: [PATCH 25/32] Improve some translations

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

docs/translate_traditionalchinese.json | 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

diff --git a/docs/translate_traditionalchinese.json b/docs/translate_traditionalchinese.json

index 9ca7cbaa..4edc65de 100644

--- a/docs/translate_traditionalchinese.json

+++ b/docs/translate_traditionalchinese.json

@@ -1043,9 +1043,9 @@

"jittorllms响应异常": "jittorllms response exception",

"在项目根目录运行这两个指令": "Run these two commands in the project root directory",

"获取tokenizer": "Get tokenizer",

- "chatbot 为WebUI中显示的对话列表": "chatbot is the list of dialogues displayed in WebUI",

+ "chatbot 为WebUI中显示的对话列表": "chatbot is the list of conversations displayed in WebUI",

"test_解析一个Cpp项目": "test_parse a Cpp project",

- "将对话记录history以Markdown格式写入文件中": "Write the dialogue record history to a file in Markdown format",

+ "将对话记录history以Markdown格式写入文件中": "Write the conversation record history to a file in Markdown format",

"装饰器函数": "Decorator function",

"玫瑰色": "Rose color",

"将单空行": "刪除單行空白",

@@ -2270,4 +2270,4 @@

"标注节点的行数范围": "標註節點的行數範圍",

"默认 True": "默認 True",

"将两个PDF拼接": "將兩個PDF拼接"

-}

\ No newline at end of file

+}

From da376068e1ae93ef09ea7c0f6a79657a4b52e5fc Mon Sep 17 00:00:00 2001

From: Alpha <1526147838@qq.com>

Date: Sat, 2 Dec 2023 21:31:59 +0800

Subject: [PATCH 26/32] Fix an error when using a local Qwen model

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

config.py | 4 ++--

request_llms/bridge_qwen.py | 4 ++--

2 files changed, 4 insertions(+), 4 deletions(-)

diff --git a/config.py b/config.py

index f170a2bb..4284cb85 100644

--- a/config.py

+++ b/config.py

@@ -91,10 +91,10 @@ AVAIL_LLM_MODELS = ["gpt-3.5-turbo-1106","gpt-4-1106-preview","gpt-4-vision-prev

"gpt-3.5-turbo-16k", "gpt-3.5-turbo", "azure-gpt-3.5",

"api2d-gpt-3.5-turbo", 'api2d-gpt-3.5-turbo-16k',

"gpt-4", "gpt-4-32k", "azure-gpt-4", "api2d-gpt-4",

- "chatglm3", "moss", "claude-2"]

+ "chatglm3", "moss", "claude-2","qwen"]

# P.S. 其他可用的模型还包括 ["zhipuai", "qianfan", "deepseekcoder", "llama2", "qwen", "gpt-3.5-turbo-0613", "gpt-3.5-turbo-16k-0613", "gpt-3.5-random"

# "spark", "sparkv2", "sparkv3", "chatglm_onnx", "claude-1-100k", "claude-2", "internlm", "jittorllms_pangualpha", "jittorllms_llama"]

-

+# 如果你需要使用Qwen的本地模型,比如qwen1.8b,那么还需要在request_llms/bridge_qwen.py设置一下模型的路径!

# 定义界面上“询问多个GPT模型”插件应该使用哪些模型,请从AVAIL_LLM_MODELS中选择,并在不同模型之间用`&`间隔,例如"gpt-3.5-turbo&chatglm3&azure-gpt-4"

MULTI_QUERY_LLM_MODELS = "gpt-3.5-turbo&chatglm3"

diff --git a/request_llms/bridge_qwen.py b/request_llms/bridge_qwen.py

index 85a4d80c..d8408d8f 100644

--- a/request_llms/bridge_qwen.py

+++ b/request_llms/bridge_qwen.py

@@ -30,7 +30,7 @@ class GetQwenLMHandle(LocalLLMHandle):

from modelscope import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

with ProxyNetworkActivate('Download_LLM'):

- model_id = 'qwen/Qwen-7B-Chat'

+ model_id = 'qwen/Qwen-7B-Chat' #在这里更改路径,如果你已经下载好了的话,同时,别忘记tokenizer

self._tokenizer = AutoTokenizer.from_pretrained('Qwen/Qwen-7B-Chat', trust_remote_code=True, resume_download=True)

# use fp16

model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto", trust_remote_code=True, fp16=True).eval()

@@ -51,7 +51,7 @@ class GetQwenLMHandle(LocalLLMHandle):

query, max_length, top_p, temperature, history = adaptor(kwargs)

- for response in self._model.chat(self._tokenizer, query, history=history, stream=True):

+ for response in self._model.chat_stream(self._tokenizer, query, history=history):

yield response

def try_to_import_special_deps(self, **kwargs):
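`MULTI_QUERY_LLM_MODELS` takes `&`-separated names, and every name must appear in `AVAIL_LLM_MODELS`. That requirement is why this patch adds `"qwen"` to that list. A hypothetical validation helper, not part of the project, might look like this; the model list is abbreviated:

```python
# Abbreviated model list; the real one lives in config.py.
AVAIL_LLM_MODELS = ["gpt-3.5-turbo", "gpt-4", "chatglm3", "moss", "claude-2", "qwen"]

def parse_multi_query_models(setting, available):
    """Split an '&'-separated model list and check each entry is available."""
    models = [m.strip() for m in setting.split("&") if m.strip()]
    missing = [m for m in models if m not in available]
    if missing:
        raise ValueError(f"not in AVAIL_LLM_MODELS: {missing}")
    return models

print(parse_multi_query_models("gpt-3.5-turbo&chatglm3", AVAIL_LLM_MODELS))
# ['gpt-3.5-turbo', 'chatglm3']
```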

From 94ab41d3c0f9ed7addc37f9a436ddd21e473ec2b Mon Sep 17 00:00:00 2001

From: Alpha <1526147838@qq.com>

Date: Sat, 2 Dec 2023 23:12:25 +0800

Subject: [PATCH 27/32] Add the qwen1.8b model

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

README.md | 261 +++++++++---------

config.py | 9 +-

request_llms/bridge_all.py | 26 +-

request_llms/bridge_qwen_1_8B.py | 67 +++++

.../{bridge_qwen.py => bridge_qwen_7B.py} | 2 +-

tests/test_llms.py | 5 +-

6 files changed, 226 insertions(+), 144 deletions(-)

create mode 100644 request_llms/bridge_qwen_1_8B.py

rename request_llms/{bridge_qwen.py => bridge_qwen_7B.py} (99%)

diff --git a/README.md b/README.md

index 54bf7c1f..c7fb9c7c 100644

--- a/README.md

+++ b/README.md

@@ -1,7 +1,7 @@

> **Caution**

->

-> 2023.11.12: 某些依赖包尚不兼容python 3.12,推荐python 3.11。

->

+>

+> 2023.11.12: 某些依赖包尚不兼容 python 3.12,推荐 python 3.11。

+>

> 2023.11.7: 安装依赖时,请选择`requirements.txt`中**指定的版本**。 安装命令:`pip install -r requirements.txt`。本项目开源免费,近期发现有人蔑视开源协议并利用本项目违规圈钱,请提高警惕,谨防上当受骗。

@@ -24,7 +24,6 @@

[Installation-image]: https://img.shields.io/badge/Installation-v3.6.1-blue?style=flat-square

[Wiki-image]: https://img.shields.io/badge/wiki-项目文档-black?style=flat-square

[PRs-image]: https://img.shields.io/badge/PRs-welcome-pink?style=flat-square

-

[License-url]: https://github.com/binary-husky/gpt_academic/blob/master/LICENSE

[Github-url]: https://github.com/binary-husky/gpt_academic

[Releases-url]: https://github.com/binary-husky/gpt_academic/releases

@@ -32,65 +31,62 @@

[Wiki-url]: https://github.com/binary-husky/gpt_academic/wiki

[PRs-url]: https://github.com/binary-husky/gpt_academic/pulls

-

-功能(⭐= 近期新增功能) | 描述

---- | ---

-⭐[接入新模型](https://github.com/binary-husky/gpt_academic/wiki/%E5%A6%82%E4%BD%95%E5%88%87%E6%8D%A2%E6%A8%A1%E5%9E%8B) | 百度[千帆](https://cloud.baidu.com/doc/WENXINWORKSHOP/s/Nlks5zkzu)与文心一言, 通义千问[Qwen](https://modelscope.cn/models/qwen/Qwen-7B-Chat/summary),上海AI-Lab[书生](https://github.com/InternLM/InternLM),讯飞[星火](https://xinghuo.xfyun.cn/),[LLaMa2](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf),[智谱API](https://open.bigmodel.cn/),DALLE3, [DeepseekCoder](https://coder.deepseek.com/)

-润色、翻译、代码解释 | 一键润色、翻译、查找论文语法错误、解释代码

-[自定义快捷键](https://www.bilibili.com/video/BV14s4y1E7jN) | 支持自定义快捷键

-模块化设计 | 支持自定义强大的[插件](https://github.com/binary-husky/gpt_academic/tree/master/crazy_functions),插件支持[热更新](https://github.com/binary-husky/gpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97)

-[程序剖析](https://www.bilibili.com/video/BV1cj411A7VW) | [插件] 一键剖析Python/C/C++/Java/Lua/...项目树 或 [自我剖析](https://www.bilibili.com/video/BV1cj411A7VW)

-读论文、[翻译](https://www.bilibili.com/video/BV1KT411x7Wn)论文 | [插件] 一键解读latex/pdf论文全文并生成摘要

-Latex全文[翻译](https://www.bilibili.com/video/BV1nk4y1Y7Js/)、[润色](https://www.bilibili.com/video/BV1FT411H7c5/) | [插件] 一键翻译或润色latex论文

-批量注释生成 | [插件] 一键批量生成函数注释

-Markdown[中英互译](https://www.bilibili.com/video/BV1yo4y157jV/) | [插件] 看到上面5种语言的[README](https://github.com/binary-husky/gpt_academic/blob/master/docs/README_EN.md)了吗?就是出自他的手笔

-chat分析报告生成 | [插件] 运行后自动生成总结汇报

-[PDF论文全文翻译功能](https://www.bilibili.com/video/BV1KT411x7Wn) | [插件] PDF论文提取题目&摘要+翻译全文(多线程)

-[Arxiv小助手](https://www.bilibili.com/video/BV1LM4y1279X) | [插件] 输入arxiv文章url即可一键翻译摘要+下载PDF

-Latex论文一键校对 | [插件] 仿Grammarly对Latex文章进行语法、拼写纠错+输出对照PDF

-[谷歌学术统合小助手](https://www.bilibili.com/video/BV19L411U7ia) | [插件] 给定任意谷歌学术搜索页面URL,让gpt帮你[写relatedworks](https://www.bilibili.com/video/BV1GP411U7Az/)

-互联网信息聚合+GPT | [插件] 一键[让GPT从互联网获取信息](https://www.bilibili.com/video/BV1om4y127ck)回答问题,让信息永不过时

-⭐Arxiv论文精细翻译 ([Docker](https://github.com/binary-husky/gpt_academic/pkgs/container/gpt_academic_with_latex)) | [插件] 一键[以超高质量翻译arxiv论文](https://www.bilibili.com/video/BV1dz4y1v77A/),目前最好的论文翻译工具

-⭐[实时语音对话输入](https://github.com/binary-husky/gpt_academic/blob/master/docs/use_audio.md) | [插件] 异步[监听音频](https://www.bilibili.com/video/BV1AV4y187Uy/),自动断句,自动寻找回答时机

-公式/图片/表格显示 | 可以同时显示公式的[tex形式和渲染形式](https://user-images.githubusercontent.com/96192199/230598842-1d7fcddd-815d-40ee-af60-baf488a199df.png),支持公式、代码高亮

-⭐AutoGen多智能体插件 | [插件] 借助微软AutoGen,探索多Agent的智能涌现可能!

-启动暗色[主题](https://github.com/binary-husky/gpt_academic/issues/173) | 在浏览器url后面添加```/?__theme=dark```可以切换dark主题

-[多LLM模型](https://www.bilibili.com/video/BV1wT411p7yf)支持 | 同时被GPT3.5、GPT4、[清华ChatGLM2](https://github.com/THUDM/ChatGLM2-6B)、[复旦MOSS](https://github.com/OpenLMLab/MOSS)伺候的感觉一定会很不错吧?

-⭐ChatGLM2微调模型 | 支持加载ChatGLM2微调模型,提供ChatGLM2微调辅助插件

-更多LLM模型接入,支持[huggingface部署](https://huggingface.co/spaces/qingxu98/gpt-academic) | 加入Newbing接口(新必应),引入清华[Jittorllms](https://github.com/Jittor/JittorLLMs)支持[LLaMA](https://github.com/facebookresearch/llama)和[盘古α](https://openi.org.cn/pangu/)

-⭐[void-terminal](https://github.com/binary-husky/void-terminal) pip包 | 脱离GUI,在Python中直接调用本项目的所有函数插件(开发中)

-⭐虚空终端插件 | [插件] 能够使用自然语言直接调度本项目其他插件

-更多新功能展示 (图像生成等) …… | 见本文档结尾处 ……

+| 功能(⭐= 近期新增功能) | 描述 |

+| ------------------------------------------------------------------------------------------------------------------------ | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| ⭐[接入新模型](https://github.com/binary-husky/gpt_academic/wiki/%E5%A6%82%E4%BD%95%E5%88%87%E6%8D%A2%E6%A8%A1%E5%9E%8B) | 百度[千帆](https://cloud.baidu.com/doc/WENXINWORKSHOP/s/Nlks5zkzu)与文心一言, 通义千问[Qwen-7B](https://modelscope.cn/models/qwen/Qwen-7B-Chat/summary),通义千问[Qwen-1_8B](https://modelscope.cn/models/qwen/Qwen-1_8B-Chat/summary),上海 AI-Lab[书生](https://github.com/InternLM/InternLM),讯飞[星火](https://xinghuo.xfyun.cn/),[LLaMa2](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf),[智谱 API](https://open.bigmodel.cn/),DALLE3, [DeepseekCoder](https://coder.deepseek.com/) |

+| 润色、翻译、代码解释 | 一键润色、翻译、查找论文语法错误、解释代码 |

+| [自定义快捷键](https://www.bilibili.com/video/BV14s4y1E7jN) | 支持自定义快捷键 |

+| 模块化设计 | 支持自定义强大的[插件](https://github.com/binary-husky/gpt_academic/tree/master/crazy_functions),插件支持[热更新](https://github.com/binary-husky/gpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97) |

+| [程序剖析](https://www.bilibili.com/video/BV1cj411A7VW) | [插件] 一键剖析 Python/C/C++/Java/Lua/...项目树 或 [自我剖析](https://www.bilibili.com/video/BV1cj411A7VW) |

+| 读论文、[翻译](https://www.bilibili.com/video/BV1KT411x7Wn)论文 | [插件] 一键解读 latex/pdf 论文全文并生成摘要 |

+| Latex 全文[翻译](https://www.bilibili.com/video/BV1nk4y1Y7Js/)、[润色](https://www.bilibili.com/video/BV1FT411H7c5/) | [插件] 一键翻译或润色 latex 论文 |

+| 批量注释生成 | [插件] 一键批量生成函数注释 |

+| Markdown[中英互译](https://www.bilibili.com/video/BV1yo4y157jV/) | [插件] 看到上面 5 种语言的[README](https://github.com/binary-husky/gpt_academic/blob/master/docs/README_EN.md)了吗?就是出自他的手笔 |

+| chat 分析报告生成 | [插件] 运行后自动生成总结汇报 |

+| [PDF 论文全文翻译功能](https://www.bilibili.com/video/BV1KT411x7Wn) | [插件] PDF 论文提取题目&摘要+翻译全文(多线程) |

+| [Arxiv 小助手](https://www.bilibili.com/video/BV1LM4y1279X) | [插件] 输入 arxiv 文章 url 即可一键翻译摘要+下载 PDF |

+| Latex 论文一键校对 | [插件] 仿 Grammarly 对 Latex 文章进行语法、拼写纠错+输出对照 PDF |

+| [谷歌学术统合小助手](https://www.bilibili.com/video/BV19L411U7ia) | [插件] 给定任意谷歌学术搜索页面 URL,让 gpt 帮你[写 relatedworks](https://www.bilibili.com/video/BV1GP411U7Az/) |

+| 互联网信息聚合+GPT | [插件] 一键[让 GPT 从互联网获取信息](https://www.bilibili.com/video/BV1om4y127ck)回答问题,让信息永不过时 |

+| ⭐Arxiv 论文精细翻译 ([Docker](https://github.com/binary-husky/gpt_academic/pkgs/container/gpt_academic_with_latex)) | [插件] 一键[以超高质量翻译 arxiv 论文](https://www.bilibili.com/video/BV1dz4y1v77A/),目前最好的论文翻译工具 |

+| ⭐[实时语音对话输入](https://github.com/binary-husky/gpt_academic/blob/master/docs/use_audio.md) | [插件] 异步[监听音频](https://www.bilibili.com/video/BV1AV4y187Uy/),自动断句,自动寻找回答时机 |

+| 公式/图片/表格显示 | 可以同时显示公式的[tex 形式和渲染形式](https://user-images.githubusercontent.com/96192199/230598842-1d7fcddd-815d-40ee-af60-baf488a199df.png),支持公式、代码高亮 |

+| ⭐AutoGen 多智能体插件 | [插件] 借助微软 AutoGen,探索多 Agent 的智能涌现可能! |

+| 启动暗色[主题](https://github.com/binary-husky/gpt_academic/issues/173) | 在浏览器 url 后面添加`/?__theme=dark`可以切换 dark 主题 |

+| [多 LLM 模型](https://www.bilibili.com/video/BV1wT411p7yf)支持 | 同时被 GPT3.5、GPT4、[清华 ChatGLM2](https://github.com/THUDM/ChatGLM2-6B)、[复旦 MOSS](https://github.com/OpenLMLab/MOSS)伺候的感觉一定会很不错吧? |

+| ⭐ChatGLM2 微调模型 | 支持加载 ChatGLM2 微调模型,提供 ChatGLM2 微调辅助插件 |

+| 更多 LLM 模型接入,支持[huggingface 部署](https://huggingface.co/spaces/qingxu98/gpt-academic) | 加入 Newbing 接口(新必应),引入清华[Jittorllms](https://github.com/Jittor/JittorLLMs)支持[LLaMA](https://github.com/facebookresearch/llama)和[盘古 α](https://openi.org.cn/pangu/) |

+| ⭐[void-terminal](https://github.com/binary-husky/void-terminal) pip 包 | 脱离 GUI,在 Python 中直接调用本项目的所有函数插件(开发中) |

+| ⭐ 虚空终端插件 | [插件] 能够使用自然语言直接调度本项目其他插件 |

+| 更多新功能展示 (图像生成等) …… | 见本文档结尾处 …… |

+

-

-- 新界面(修改`config.py`中的LAYOUT选项即可实现“左右布局”和“上下布局”的切换)

+- 新界面(修改`config.py`中的 LAYOUT 选项即可实现“左右布局”和“上下布局”的切换)

===>

@@ -270,7 +267,7 @@ Tip:不指定文件直接点击 `载入对话历史存档` 可以查看历史h

3. 虚空终端(从自然语言输入中,理解用户意图+自动调用其他插件)

-- 步骤一:输入 “ 请调用插件翻译PDF论文,地址为https://openreview.net/pdf?id=rJl0r3R9KX ”

+- 步骤一:输入 “ 请调用插件翻译 PDF 论文,地址为https://openreview.net/pdf?id=rJl0r3R9KX ”

- 步骤二:点击“虚空终端”

@@ -294,17 +291,17 @@ Tip:不指定文件直接点击 `载入对话历史存档` 可以查看历史h

-7. OpenAI图像生成

+7. OpenAI 图像生成

-8. OpenAI音频解析与总结

+8. OpenAI 音频解析与总结

-9. Latex全文校对纠错

+9. Latex 全文校对纠错

===>

@@ -315,47 +312,46 @@ Tip:不指定文件直接点击 `载入对话历史存档` 可以查看历史h

-

-

### II:版本:

-- version 3.70(todo): 优化AutoGen插件主题并设计一系列衍生插件

-- version 3.60: 引入AutoGen作为新一代插件的基石

-- version 3.57: 支持GLM3,星火v3,文心一言v4,修复本地模型的并发BUG

-- version 3.56: 支持动态追加基础功能按钮,新汇报PDF汇总页面

+- version 3.70(todo): 优化 AutoGen 插件主题并设计一系列衍生插件

+- version 3.60: 引入 AutoGen 作为新一代插件的基石

+- version 3.57: 支持 GLM3,星火 v3,文心一言 v4,修复本地模型的并发 BUG

+- version 3.56: 支持动态追加基础功能按钮,新汇报 PDF 汇总页面

- version 3.55: 重构前端界面,引入悬浮窗口与菜单栏

- version 3.54: 新增动态代码解释器(Code Interpreter)(待完善)

- version 3.53: 支持动态选择不同界面主题,提高稳定性&解决多用户冲突问题

-- version 3.50: 使用自然语言调用本项目的所有函数插件(虚空终端),支持插件分类,改进UI,设计新主题

+- version 3.50: 使用自然语言调用本项目的所有函数插件(虚空终端),支持插件分类,改进 UI,设计新主题

- version 3.49: 支持百度千帆平台和文心一言

-- version 3.48: 支持阿里达摩院通义千问,上海AI-Lab书生,讯飞星火

+- version 3.48: 支持阿里达摩院通义千问,上海 AI-Lab 书生,讯飞星火

- version 3.46: 支持完全脱手操作的实时语音对话

-- version 3.45: 支持自定义ChatGLM2微调模型

-- version 3.44: 正式支持Azure,优化界面易用性

-- version 3.4: +arxiv论文翻译、latex论文批改功能

+- version 3.45: 支持自定义 ChatGLM2 微调模型

+- version 3.44: 正式支持 Azure,优化界面易用性

+- version 3.4: +arxiv 论文翻译、latex 论文批改功能

- version 3.3: +互联网信息综合功能

-- version 3.2: 函数插件支持更多参数接口 (保存对话功能, 解读任意语言代码+同时询问任意的LLM组合)

-- version 3.1: 支持同时问询多个gpt模型!支持api2d,支持多个apikey负载均衡

-- version 3.0: 对chatglm和其他小型llm的支持

+- version 3.2: 函数插件支持更多参数接口 (保存对话功能, 解读任意语言代码+同时询问任意的 LLM 组合)

+- version 3.1: 支持同时问询多个 gpt 模型!支持 api2d,支持多个 apikey 负载均衡

+- version 3.0: 对 chatglm 和其他小型 llm 的支持

- version 2.6: 重构了插件结构,提高了交互性,加入更多插件

-- version 2.5: 自更新,解决总结大工程源代码时文本过长、token溢出的问题

-- version 2.4: 新增PDF全文翻译功能; 新增输入区切换位置的功能

+- version 2.5: 自更新,解决总结大工程源代码时文本过长、token 溢出的问题

+- version 2.4: 新增 PDF 全文翻译功能; 新增输入区切换位置的功能

- version 2.3: 增强多线程交互性

- version 2.2: 函数插件支持热重载

- version 2.1: 可折叠式布局

- version 2.0: 引入模块化函数插件

- version 1.0: 基础功能

-GPT Academic开发者QQ群:`610599535`

+GPT Academic 开发者 QQ 群:`610599535`

- 已知问题

- - 某些浏览器翻译插件干扰此软件前端的运行

- - 官方Gradio目前有很多兼容性问题,请**务必使用`requirement.txt`安装Gradio**

+ - 某些浏览器翻译插件干扰此软件前端的运行

+ - 官方 Gradio 目前有很多兼容性问题,请**务必使用`requirement.txt`安装 Gradio**

### III:主题

-可以通过修改`THEME`选项(config.py)变更主题

-1. `Chuanhu-Small-and-Beautiful` [网址](https://github.com/GaiZhenbiao/ChuanhuChatGPT/)

+可以通过修改`THEME`选项(config.py)变更主题

+

+1. `Chuanhu-Small-and-Beautiful` [网址](https://github.com/GaiZhenbiao/ChuanhuChatGPT/)

### IV:本项目的开发分支

@@ -363,7 +359,6 @@ GPT Academic开发者QQ群:`610599535`

2. `frontier` 分支: 开发分支,测试版

3. 如何接入其他大模型:[接入其他大模型](request_llms/README.md)

-

### V:参考与学习

```

diff --git a/config.py b/config.py

index 4284cb85..45365f5e 100644

--- a/config.py

+++ b/config.py

@@ -91,10 +91,10 @@ AVAIL_LLM_MODELS = ["gpt-3.5-turbo-1106","gpt-4-1106-preview","gpt-4-vision-prev

"gpt-3.5-turbo-16k", "gpt-3.5-turbo", "azure-gpt-3.5",

"api2d-gpt-3.5-turbo", 'api2d-gpt-3.5-turbo-16k',

"gpt-4", "gpt-4-32k", "azure-gpt-4", "api2d-gpt-4",

- "chatglm3", "moss", "claude-2","qwen"]

-# P.S. 其他可用的模型还包括 ["zhipuai", "qianfan", "deepseekcoder", "llama2", "qwen", "gpt-3.5-turbo-0613", "gpt-3.5-turbo-16k-0613", "gpt-3.5-random"

+ "chatglm3", "moss", "claude-2","qwen-1_8B","qwen-7B"]

+# P.S. 其他可用的模型还包括 ["zhipuai", "qianfan", "deepseekcoder", "llama2", "gpt-3.5-turbo-0613", "gpt-3.5-turbo-16k-0613", "gpt-3.5-random"

# "spark", "sparkv2", "sparkv3", "chatglm_onnx", "claude-1-100k", "claude-2", "internlm", "jittorllms_pangualpha", "jittorllms_llama"]

-# 如果你需要使用Qwen的本地模型,比如qwen1.8b,那么还需要在request_llms\bridge_qwen.py设置一下模型的路径!

+# 如果你需要使用Qwen的本地模型,比如qwen1.8b,那么还需要在request_llms下找到对应的文件,设置一下模型的路径!

# 定义界面上“询问多个GPT模型”插件应该使用哪些模型,请从AVAIL_LLM_MODELS中选择,并在不同模型之间用`&`间隔,例如"gpt-3.5-turbo&chatglm3&azure-gpt-4"

MULTI_QUERY_LLM_MODELS = "gpt-3.5-turbo&chatglm3"

@@ -291,7 +291,8 @@ NUM_CUSTOM_BASIC_BTN = 4

├── "jittorllms_pangualpha"

├── "jittorllms_llama"

├── "deepseekcoder"

-├── "qwen"

+├── "qwen-1_8B"

+├── "qwen-7B"

├── RWKV的支持见Wiki

└── "llama2"
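After this patch the local Qwen entries are named `qwen-1_8B` and `qwen-7B`, so a `MULTI_QUERY_LLM_MODELS` value that still says `qwen` would no longer match anything in `AVAIL_LLM_MODELS`. The following is a minimal sketch of how the `&`-separated setting could be checked against the available list. `parse_multi_query_models` is a hypothetical helper, not a gpt_academic function, and the model list is an excerpt of the values shown in the hunk above.

```python
from typing import List

# Excerpt of the AVAIL_LLM_MODELS values shown in the config.py hunk above.
AVAIL_LLM_MODELS = ["gpt-3.5-turbo", "gpt-4", "chatglm3", "moss",
                    "claude-2", "qwen-1_8B", "qwen-7B"]
MULTI_QUERY_LLM_MODELS = "gpt-3.5-turbo&chatglm3"


def parse_multi_query_models(spec: str, available: List[str]) -> List[str]:
    """Split an '&'-separated model string and reject names that are not available."""
    names = [name.strip() for name in spec.split("&") if name.strip()]
    missing = [name for name in names if name not in available]
    if missing:
        raise ValueError(f"not in AVAIL_LLM_MODELS: {missing}")
    return names


if __name__ == "__main__":
    print(parse_multi_query_models(MULTI_QUERY_LLM_MODELS, AVAIL_LLM_MODELS))
```

With the renamed entries, a leftover `"qwen&gpt-4"` would raise instead of silently dropping the Qwen model.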

diff --git a/request_llms/bridge_all.py b/request_llms/bridge_all.py

index 8dece548..f20ca651 100644

--- a/request_llms/bridge_all.py

+++ b/request_llms/bridge_all.py

@@ -431,12 +431,12 @@ if "chatglm_onnx" in AVAIL_LLM_MODELS:

})

except:

print(trimmed_format_exc())

-if "qwen" in AVAIL_LLM_MODELS:

+if "qwen-1_8B" in AVAIL_LLM_MODELS: # qwen-1.8B

try:

- from .bridge_qwen import predict_no_ui_long_connection as qwen_noui

- from .bridge_qwen import predict as qwen_ui

+ from .bridge_qwen_1_8B import predict_no_ui_long_connection as qwen_noui

+ from .bridge_qwen_1_8B import predict as qwen_ui

model_info.update({

- "qwen": {

+ "qwen-1_8B": {

"fn_with_ui": qwen_ui,

"fn_without_ui": qwen_noui,

"endpoint": None,

@@ -447,6 +447,24 @@ if "qwen" in AVAIL_LLM_MODELS:

})

except:

print(trimmed_format_exc())

+

+if "qwen-7B" in AVAIL_LLM_MODELS: # qwen-7B

+ try:

+ from .bridge_qwen_7B import predict_no_ui_long_connection as qwen_noui

+ from .bridge_qwen_7B import predict as qwen_ui

+ model_info.update({

+ "qwen-7B": {

+ "fn_with_ui": qwen_ui,

+ "fn_without_ui": qwen_noui,

+ "endpoint": None,

+ "max_token": 4096,

+ "tokenizer": tokenizer_gpt35,

+ "token_cnt": get_token_num_gpt35,

+ }

+ })

+ except:

+ print(trimmed_format_exc())

+

if "chatgpt_website" in AVAIL_LLM_MODELS: # 接入一些逆向工程https://github.com/acheong08/ChatGPT-to-API/

try:

from .bridge_chatgpt_website import predict_no_ui_long_connection as chatgpt_website_noui
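The `bridge_all.py` hunk follows a fixed pattern. A model is registered in `model_info` only when its name appears in `AVAIL_LLM_MODELS`. The bridge module is imported inside `try`, so a missing optional dependency only prints a traceback and does not stop start-up. Below is a minimal sketch of that pattern. `register_local_model` is a hypothetical name, and `traceback.format_exc` stands in for the project's `trimmed_format_exc`.

```python
import importlib
import traceback
from typing import Dict, List

model_info: Dict[str, dict] = {}


def register_local_model(name: str, module_name: str, available: List[str],
                         max_token: int = 4096) -> bool:
    """Register `name` only if it is enabled and its bridge module imports cleanly."""
    if name not in available:
        return False  # model not enabled in AVAIL_LLM_MODELS
    try:
        module = importlib.import_module(module_name)
        model_info[name] = {
            "fn_with_ui": module.predict,
            "fn_without_ui": module.predict_no_ui_long_connection,
            "endpoint": None,  # local models have no HTTP endpoint
            "max_token": max_token,
        }
        return True
    except Exception:
        # Log and continue, like print(trimmed_format_exc()) in bridge_all.py.
        print(traceback.format_exc())
        return False
```

Keeping one dictionary keyed by the user-facing model name is what allows the rename from `"qwen"` to `"qwen-1_8B"` and `"qwen-7B"` to be a local change in this file.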

diff --git a/request_llms/bridge_qwen_1_8B.py b/request_llms/bridge_qwen_1_8B.py

new file mode 100644

index 00000000..06288305

--- /dev/null

+++ b/request_llms/bridge_qwen_1_8B.py

@@ -0,0 +1,67 @@

+model_name = "Qwen1_8B"

+cmd_to_install = "`pip install -r request_llms/requirements_qwen.txt`"

+

+

+from transformers import AutoModel, AutoTokenizer

+import time

+import threading

+import importlib

+from toolbox import update_ui, get_conf, ProxyNetworkActivate

+from multiprocessing import Process, Pipe

+from .local_llm_class import LocalLLMHandle, get_local_llm_predict_fns

+

+

+

+# ------------------------------------------------------------------------------------------------------------------------

+# 🔌💻 Local Model

+# ------------------------------------------------------------------------------------------------------------------------

+class GetQwenLMHandle(LocalLLMHandle):

+

+ def load_model_info(self):

+ # 🏃♂️🏃♂️🏃♂️ 子进程执行

+ self.model_name = model_name

+ self.cmd_to_install = cmd_to_install

+

+ def load_model_and_tokenizer(self):

+ # 🏃♂️🏃♂️🏃♂️ 子进程执行

+ import os, glob

+ import os

+ import platform

+ from modelscope import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

+

+ with ProxyNetworkActivate('Download_LLM'):

+ model_id = 'Qwen/Qwen-1_8B-Chat'

+ self._tokenizer = AutoTokenizer.from_pretrained('Qwen/Qwen-1_8B-Chat', trust_remote_code=True, resume_download=True)

+ # use fp16

+ model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto", trust_remote_code=True, fp16=True).eval()

+ model.generation_config = GenerationConfig.from_pretrained(model_id, trust_remote_code=True) # 可指定不同的生成长度、top_p等相关超参

+ self._model = model

+

+ return self._model, self._tokenizer

+

+ def llm_stream_generator(self, **kwargs):

+ # 🏃♂️🏃♂️🏃♂️ 子进程执行

+ def adaptor(kwargs):

+ query = kwargs['query']

+ max_length = kwargs['max_length']

+ top_p = kwargs['top_p']

+ temperature = kwargs['temperature']

+ history = kwargs['history']

+ return query, max_length, top_p, temperature, history

+

+ query, max_length, top_p, temperature, history = adaptor(kwargs)

+

+ for response in self._model.chat_stream(self._tokenizer, query, history=history):

+ yield response

+

+ def try_to_import_special_deps(self, **kwargs):

+ # import something that will raise error if the user does not install requirement_*.txt

+ # 🏃♂️🏃♂️🏃♂️ 主进程执行

+ import importlib

+ importlib.import_module('modelscope')

+

+

+# ------------------------------------------------------------------------------------------------------------------------

+# 🔌💻 GPT-Academic Interface

+# ------------------------------------------------------------------------------------------------------------------------

+predict_no_ui_long_connection, predict = get_local_llm_predict_fns(GetQwenLMHandle, model_name)

\ No newline at end of file
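In `bridge_qwen_1_8B.py`, `llm_stream_generator` unpacks `query`, `max_length`, `top_p`, `temperature` and `history` through `adaptor`. Only `query` and `history` are then passed to `chat_stream`. The sketch below reproduces that call shape with a hypothetical `StubChatModel` in place of the real Qwen model, so the generator can run without downloading weights. The stub's prefix-by-prefix streaming is an assumption made for illustration.

```python
from typing import Iterator, List, Tuple


class StubChatModel:
    """Hypothetical stand-in for the Qwen model: only chat_stream's shape matters."""

    def chat_stream(self, tokenizer, query: str,
                    history: List[Tuple[str, str]]) -> Iterator[str]:
        reply = f"echo: {query}"
        # Yield progressively longer prefixes, like a streaming decoder.
        for end in range(1, len(reply) + 1):
            yield reply[:end]


def adaptor(kwargs: dict):
    """Unpack the keyword arguments the local-model handle receives."""
    return (kwargs["query"], kwargs["max_length"], kwargs["top_p"],
            kwargs["temperature"], kwargs["history"])


def llm_stream_generator(model, tokenizer, **kwargs) -> Iterator[str]:
    query, max_length, top_p, temperature, history = adaptor(kwargs)
    # As in the bridge, max_length/top_p/temperature are unpacked but not forwarded.
    for response in model.chat_stream(tokenizer, query, history=history):
        yield response


if __name__ == "__main__":
    for chunk in llm_stream_generator(StubChatModel(), None, query="hi", max_length=32,
                                      top_p=0.8, temperature=0.7, history=[]):
        print(chunk)
```

Because the sampling arguments are dropped, generation settings for this bridge come from the `GenerationConfig` loaded in `load_model_and_tokenizer`, not from the UI sliders.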

diff --git a/request_llms/bridge_qwen.py b/request_llms/bridge_qwen_7B.py

similarity index 99%

rename from request_llms/bridge_qwen.py

rename to request_llms/bridge_qwen_7B.py

index d8408d8f..dfe1fc44 100644

--- a/request_llms/bridge_qwen.py

+++ b/request_llms/bridge_qwen_7B.py

@@ -1,4 +1,4 @@

-model_name = "Qwen"

+model_name = "Qwen-7B"

cmd_to_install = "`pip install -r request_llms/requirements_qwen.txt`"

diff --git a/tests/test_llms.py b/tests/test_llms.py

index 8b685972..2426cc3d 100644

--- a/tests/test_llms.py

+++ b/tests/test_llms.py

@@ -16,8 +16,9 @@ if __name__ == "__main__":

# from request_llms.bridge_jittorllms_llama import predict_no_ui_long_connection

# from request_llms.bridge_claude import predict_no_ui_long_connection

# from request_llms.bridge_internlm import predict_no_ui_long_connection

- from request_llms.bridge_deepseekcoder import predict_no_ui_long_connection

- # from request_llms.bridge_qwen import predict_no_ui_long_connection

+ # from request_llms.bridge_deepseekcoder import predict_no_ui_long_connection

+ # from request_llms.bridge_qwen_7B import predict_no_ui_long_connection

+ from request_llms.bridge_qwen_1_8B import predict_no_ui_long_connection

# from request_llms.bridge_spark import predict_no_ui_long_connection

# from request_llms.bridge_zhipu import predict_no_ui_long_connection

# from request_llms.bridge_chatglm3 import predict_no_ui_long_connection

From 0cd3274d04830aacd1f0e1e683f23665432013f7 Mon Sep 17 00:00:00 2001

From: binary-husky

Date: Mon, 4 Dec 2023 10:30:02 +0800

Subject: [PATCH 28/32] combine qwen model family

---

config.py | 8 +++++--

.../{bridge_qwen_7B.py => bridge_qwen.py} | 24 +++++++------------

request_llms/requirements_qwen.txt | 4 +++-

3 files changed, 17 insertions(+), 19 deletions(-)

rename request_llms/{bridge_qwen_7B.py => bridge_qwen.py} (77%)

diff --git a/config.py b/config.py

index f170a2bb..44e9f079 100644

--- a/config.py

+++ b/config.py

@@ -15,13 +15,13 @@ API_KEY = "此处填API密钥" # 可同时填写多个API-KEY,用英文逗

USE_PROXY = False

if USE_PROXY:

"""

+ 代理网络的地址,打开你的代理软件查看代理协议(socks5h / http)、地址(localhost)和端口(11284)

填写格式是 [协议]:// [地址] :[端口],填写之前不要忘记把USE_PROXY改成True,如果直接在海外服务器部署,此处不修改

<配置教程&视频教程> https://github.com/binary-husky/gpt_academic/issues/1>

[协议] 常见协议无非socks5h/http; 例如 v2**y 和 ss* 的默认本地协议是socks5h; 而cl**h 的默认本地协议是http

- [地址] 懂的都懂,不懂就填localhost或者127.0.0.1肯定错不了(localhost意思是代理软件安装在本机上)

+ [地址] 填localhost或者127.0.0.1(localhost意思是代理软件安装在本机上)

[端口] 在代理软件的设置里找。虽然不同的代理软件界面不一样,但端口号都应该在最显眼的位置上

"""

- # 代理网络的地址,打开你的*学*网软件查看代理的协议(socks5h / http)、地址(localhost)和端口(11284)

proxies = {

# [协议]:// [地址] :[端口]

"http": "socks5h://localhost:11284", # 再例如 "http": "http://127.0.0.1:7890",

@@ -100,6 +100,10 @@ AVAIL_LLM_MODELS = ["gpt-3.5-turbo-1106","gpt-4-1106-preview","gpt-4-vision-prev

MULTI_QUERY_LLM_MODELS = "gpt-3.5-turbo&chatglm3"

+# 选择本地模型变体(只有当AVAIL_LLM_MODELS包含了对应本地模型时,才会起作用)

+QWEN_MODEL_SELECTION = "Qwen/Qwen-1_8B-Chat-Int8"

+

+

# 百度千帆(LLM_MODEL="qianfan")

BAIDU_CLOUD_API_KEY = ''

BAIDU_CLOUD_SECRET_KEY = ''
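The proxy docstring asks for values in the form `[protocol]://[address]:[port]`, with `socks5h` or `http` as the usual protocols and `localhost` as the address when the proxy runs on the same machine. The following is a minimal sketch that assembles such a `proxies` dict. `build_proxies` is hypothetical. The sketch assumes the same URL serves both the `http` and `https` keys, which are the keys `requests` looks up.

```python
from typing import Dict

SUPPORTED_PROTOCOLS = ("socks5h", "http")


def build_proxies(protocol: str, address: str = "localhost",
                  port: int = 11284) -> Dict[str, str]:
    """Return a requests-style proxies dict for '[protocol]://[address]:[port]'."""
    if protocol not in SUPPORTED_PROTOCOLS:
        raise ValueError(f"unsupported proxy protocol: {protocol!r}")
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    url = f"{protocol}://{address}:{port}"
    # Assumption: plain and TLS traffic go through the same local proxy.
    return {"http": url, "https": url}


if __name__ == "__main__":
    print(build_proxies("socks5h"))
    print(build_proxies("http", "127.0.0.1", 7890))
```

Note that SOCKS URLs only work with `requests` when the SOCKS extra is installed. The project's `requirements.txt` lists `requests[socks]` for that reason.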

diff --git a/request_llms/bridge_qwen_7B.py b/request_llms/bridge_qwen.py

similarity index 77%

rename from request_llms/bridge_qwen_7B.py

rename to request_llms/bridge_qwen.py

index dfe1fc44..1bd846be 100644

--- a/request_llms/bridge_qwen_7B.py

+++ b/request_llms/bridge_qwen.py

@@ -1,13 +1,7 @@

-model_name = "Qwen-7B"

+model_name = "Qwen"

cmd_to_install = "`pip install -r request_llms/requirements_qwen.txt`"

-

-from transformers import AutoModel, AutoTokenizer

-import time

-import threading

-import importlib

-from toolbox import update_ui, get_conf, ProxyNetworkActivate

-from multiprocessing import Process, Pipe

+from toolbox import ProxyNetworkActivate, get_conf

from .local_llm_class import LocalLLMHandle, get_local_llm_predict_fns

@@ -24,16 +18,14 @@ class GetQwenLMHandle(LocalLLMHandle):

def load_model_and_tokenizer(self):

# 🏃♂️🏃♂️🏃♂️ 子进程执行

- import os, glob

- import os

- import platform

- from modelscope import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

-

+ # from modelscope import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

+ from transformers import AutoModelForCausalLM, AutoTokenizer

+ from transformers.generation import GenerationConfig

with ProxyNetworkActivate('Download_LLM'):

- model_id = 'qwen/Qwen-7B-Chat' #在这里更改路径,如果你已经下载好了的话,同时,别忘记tokenizer

- self._tokenizer = AutoTokenizer.from_pretrained('Qwen/Qwen-7B-Chat', trust_remote_code=True, resume_download=True)

+ model_id = get_conf('QWEN_MODEL_SELECTION') #在这里更改路径,如果你已经下载好了的话,同时,别忘记tokenizer

+ self._tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True, resume_download=True)

# use fp16

- model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto", trust_remote_code=True, fp16=True).eval()

+ model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto", trust_remote_code=True).eval()

model.generation_config = GenerationConfig.from_pretrained(model_id, trust_remote_code=True) # 可指定不同的生成长度、top_p等相关超参

self._model = model

diff --git a/request_llms/requirements_qwen.txt b/request_llms/requirements_qwen.txt

index 3d7d62a0..ea65dee7 100644

--- a/request_llms/requirements_qwen.txt

+++ b/request_llms/requirements_qwen.txt

@@ -1,2 +1,4 @@

modelscope

-transformers_stream_generator

\ No newline at end of file

+transformers_stream_generator

+auto-gptq

+optimum

\ No newline at end of file
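`try_to_import_special_deps` in the Qwen bridge only imports `modelscope`. This patch also adds `auto-gptq` and `optimum` to `requirements_qwen.txt`, alongside the switch to the Int8 default model. The following is a minimal sketch of checking every listed package up front without importing it. The pip-name to import-name mapping, in particular `auto-gptq` → `auto_gptq`, is an assumption. `missing_packages` is a hypothetical helper.

```python
import importlib.util
from typing import Dict, List

# pip name -> import name (assumed) for request_llms/requirements_qwen.txt
QWEN_DEPS: Dict[str, str] = {
    "modelscope": "modelscope",
    "transformers_stream_generator": "transformers_stream_generator",
    "auto-gptq": "auto_gptq",
    "optimum": "optimum",
}


def missing_packages(deps: Dict[str, str]) -> List[str]:
    """Return pip names whose top-level module cannot be located (nothing is imported)."""
    return [pip_name for pip_name, module in deps.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages(QWEN_DEPS)
    if missing:
        print(f"missing {missing}; run `pip install -r request_llms/requirements_qwen.txt`")
```

Using `find_spec` avoids the cost and side effects of importing heavy packages just to see whether they exist.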

From 95504f0bb75a2835e168b033d5c945d58170a451 Mon Sep 17 00:00:00 2001

From: Skyzayre <120616113+Skyzayre@users.noreply.github.com>

Date: Mon, 4 Dec 2023 10:31:12 +0800

Subject: [PATCH 29/32] Resolve conflicts

---

crazy_functions/图片生成.py | 74 +++++++++++++++++++++++++++++++------

1 file changed, 62 insertions(+), 12 deletions(-)

diff --git a/crazy_functions/图片生成.py b/crazy_functions/图片生成.py

index 104d4034..d5c4eb05 100644

--- a/crazy_functions/图片生成.py

+++ b/crazy_functions/图片生成.py

@@ -150,18 +150,27 @@ class ImageEditState(GptAcademicState):

file = None if not confirm else file_manifest[0]

return confirm, file

+ def lock_plugin(self, chatbot):

+ chatbot._cookies['lock_plugin'] = 'crazy_functions.图片生成->图片修改_DALLE2'

+ self.dump_state(chatbot)

+

+ def unlock_plugin(self, chatbot):

+ self.reset()

+ chatbot._cookies['lock_plugin'] = None

+ self.dump_state(chatbot)

+

def get_resolution(self, x):

return (x in ['256x256', '512x512', '1024x1024']), x

-

+

def get_prompt(self, x):

confirm = (len(x)>=5) and (not self.get_resolution(x)[0]) and (not self.get_image_file(x)[0])

return confirm, x

-

+

def reset(self):

self.req = [

- {'value':None, 'description': '请先上传图像(必须是.png格式), 然后再次点击本插件', 'verify_fn': self.get_image_file},

- {'value':None, 'description': '请输入分辨率,可选:256x256, 512x512 或 1024x1024', 'verify_fn': self.get_resolution},

- {'value':None, 'description': '请输入修改需求,建议您使用英文提示词', 'verify_fn': self.get_prompt},

+ {'value':None, 'description': '请先上传图像(必须是.png格式), 然后再次点击本插件', 'verify_fn': self.get_image_file},

+ {'value':None, 'description': '请输入分辨率,可选:256x256, 512x512 或 1024x1024, 然后再次点击本插件', 'verify_fn': self.get_resolution},

+ {'value':None, 'description': '请输入修改需求,建议您使用英文提示词, 然后再次点击本插件', 'verify_fn': self.get_prompt},

]

self.info = ""

@@ -171,7 +180,7 @@ class ImageEditState(GptAcademicState):

confirm, res = r['verify_fn'](prompt)

if confirm:

r['value'] = res

- self.set_state(chatbot, 'dummy_key', 'dummy_value')

+ self.dump_state(chatbot)

break

return self

@@ -190,22 +199,63 @@ def 图片修改_DALLE2(prompt, llm_kwargs, plugin_kwargs, chatbot, history, sys

history = [] # 清空历史

state = ImageEditState.get_state(chatbot, ImageEditState)

state = state.feed(prompt, chatbot)

+ state.lock_plugin(chatbot)

if not state.already_obtained_all_materials():

- chatbot.append(["图片修改(先上传图片,再输入修改需求,最后输入分辨率)", state.next_req()])

+ chatbot.append(["图片修改\n\n1. 上传图片(图片中需要修改的位置用橡皮擦擦除为纯白色,即RGB=255,255,255)\n2. 输入分辨率 \n3. 输入修改需求", state.next_req()])

yield from update_ui(chatbot=chatbot, history=history)

return

- image_path = state.req[0]

- resolution = state.req[1]

- prompt = state.req[2]

+ image_path = state.req[0]['value']

+ resolution = state.req[1]['value']

+ prompt = state.req[2]['value']

chatbot.append(["图片修改, 执行中", f"图片:`{image_path}`<br/>分辨率:`{resolution}`<br/>修改需求:`{prompt}`"])

yield from update_ui(chatbot=chatbot, history=history)

-

image_url, image_path = edit_image(llm_kwargs, prompt, image_path, resolution)

- chatbot.append([state.prompt,

+ chatbot.append([prompt,

f'图像中转网址:

`{image_url}`

'+

f'中转网址预览:

`{image_path}`

'+

f'本地文件预览:
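`ImageEditState` gathers three inputs across several clicks of the plugin: an image, a resolution and an edit prompt. `feed()` walks the still-empty entries of `req` and fills the first one whose `verify_fn` accepts the text. Each entry is a dict, which is why the patch reads `state.req[0]['value']` instead of `state.req[0]`. The following is a simplified, hypothetical stand-in for that logic. As an assumption, it treats any `.png` path as an image instead of inspecting uploaded files, and it leaves out the cookie-based plugin locking.

```python
from typing import List, Tuple

VALID_RESOLUTIONS = ("256x256", "512x512", "1024x1024")


class EditRequestCollector:
    """Hypothetical, simplified stand-in for ImageEditState's input collection."""

    def __init__(self) -> None:
        self.req: List[dict] = [
            {"value": None, "verify_fn": self.get_image_file},
            {"value": None, "verify_fn": self.get_resolution},
            {"value": None, "verify_fn": self.get_prompt},
        ]

    def get_image_file(self, x: str) -> Tuple[bool, str]:
        # Assumption: any .png path counts as the uploaded image.
        return x.lower().endswith(".png"), x

    def get_resolution(self, x: str) -> Tuple[bool, str]:
        return x in VALID_RESOLUTIONS, x

    def get_prompt(self, x: str) -> Tuple[bool, str]:
        # Same rule as the plugin: >= 5 chars, and neither a resolution nor an image.
        ok = len(x) >= 5 and not self.get_resolution(x)[0] and not self.get_image_file(x)[0]
        return ok, x

    def feed(self, text: str) -> "EditRequestCollector":
        for r in self.req:
            if r["value"] is None:
                confirm, res = r["verify_fn"](text)
                if confirm:
                    r["value"] = res
                    break  # one input fills at most one slot
        return self

    def already_obtained_all_materials(self) -> bool:
        return all(r["value"] is not None for r in self.req)

    def values(self) -> List[str]:
        # The stored input lives under "value" in each dict.
        return [r["value"] for r in self.req]
```

Because every empty slot is tried, inputs may arrive out of order. A resolution typed first is stored in the second slot, and the plugin keeps prompting for the image.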

From 6d2f1262533129770e9e9c78134fb9d2f739e3cc Mon Sep 17 00:00:00 2001

From: binary-husky

Date: Mon, 4 Dec 2023 10:53:07 +0800

Subject: [PATCH 31/32] recv requirements.txt

---

requirements.txt | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/requirements.txt b/requirements.txt

index 94fac531..a5782f77 100644

--- a/requirements.txt

+++ b/requirements.txt

@@ -4,7 +4,7 @@ tiktoken>=0.3.3

requests[socks]

pydantic==1.10.11

transformers>=4.27.1

-scipdf_parser

+scipdf_parser>=0.52

python-markdown-math

websocket-client

beautifulsoup4

From b0c627909a386051a4e2abe98b17788ddb7d5587 Mon Sep 17 00:00:00 2001

From: Alpha <1526147838@qq.com>

Date: Mon, 4 Dec 2023 12:51:41 +0800

Subject: [PATCH 32/32] Change some comments

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

config.py | 2 ++

request_llms/bridge_qwen.py | 2 +-

2 files changed, 3 insertions(+), 1 deletion(-)

diff --git a/config.py b/config.py

index 44e9f079..a5117245 100644

--- a/config.py

+++ b/config.py

@@ -101,6 +101,8 @@ MULTI_QUERY_LLM_MODELS = "gpt-3.5-turbo&chatglm3"

# 选择本地模型变体(只有当AVAIL_LLM_MODELS包含了对应本地模型时,才会起作用)

+# 如果你选择Qwen系列的模型,那么请在下面的QWEN_MODEL_SELECTION中指定具体的模型

+# 也可以是具体的模型路径

QWEN_MODEL_SELECTION = "Qwen/Qwen-1_8B-Chat-Int8"
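The new comments say `QWEN_MODEL_SELECTION` may name a specific model or a local model path. Hugging Face `from_pretrained` accepts either a hub repo id such as `Qwen/Qwen-1_8B-Chat-Int8` or a local directory. The following is a minimal sketch of telling the two apart before loading. `describe_model_selection` is hypothetical. Its rule, an existing directory or else an `org/name` id, is an assumption for illustration.

```python
import os


def describe_model_selection(selection: str) -> str:
    """Classify a QWEN_MODEL_SELECTION value as a local directory or a hub repo id."""
    if os.path.isdir(selection):
        return "local"  # load weights from disk, no download needed
    parts = selection.split("/")
    if len(parts) == 2 and all(parts):
        return "hub"  # e.g. "Qwen/Qwen-1_8B-Chat-Int8", fetched on first use
    raise ValueError(f"neither an existing directory nor an 'org/name' id: {selection!r}")


if __name__ == "__main__":
    print(describe_model_selection("Qwen/Qwen-1_8B-Chat-Int8"))
```

A check like this surfaces a mistyped local path early, instead of letting it be interpreted as a nonexistent hub repo.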

diff --git a/request_llms/bridge_qwen.py b/request_llms/bridge_qwen.py

index 1bd846be..940c41d5 100644

--- a/request_llms/bridge_qwen.py

+++ b/request_llms/bridge_qwen.py

@@ -22,7 +22,7 @@ class GetQwenLMHandle(LocalLLMHandle):

from transformers import AutoModelForCausalLM, AutoTokenizer

from transformers.generation import GenerationConfig

with ProxyNetworkActivate('Download_LLM'):

- model_id = get_conf('QWEN_MODEL_SELECTION') #在这里更改路径,如果你已经下载好了的话,同时,别忘记tokenizer

+ model_id = get_conf('QWEN_MODEL_SELECTION')

self._tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True, resume_download=True)

# use fp16

model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto", trust_remote_code=True).eval()

-- 如果输出包含公式,会同时以tex形式和渲染形式显示,方便复制和阅读

+- 如果输出包含公式,会以tex形式和渲染形式同时显示,方便复制和阅读


-- 懒得看项目代码?整个工程直接给chatgpt炫嘴里

+- 懒得看项目代码?直接把整个工程炫ChatGPT嘴里


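The `version` file is a small JSON document. `new_feature` packs the release notes into one string separated by ` <-> `, and `show_feature` controls whether they are shown. The following is a minimal sketch of reading it. `read_features` is a hypothetical helper, and the sample text is a shortened excerpt of the new file contents.

```python
import json
from typing import List, Tuple

# Shortened excerpt of the updated version file.
VERSION_TEXT = """{
    "version": 3.62,
    "show_feature": true,
    "new_feature": "修复若干隐蔽的内存BUG <-> 修复多用户冲突问题 <-> 接入Deepseek Coder"
}"""


def read_features(text: str) -> Tuple[float, List[str]]:
    """Return the version number and the list of announced features."""
    info = json.loads(text)
    features: List[str] = []
    if info.get("show_feature"):
        features = [f.strip() for f in info["new_feature"].split("<->") if f.strip()]
    return info["version"], features


if __name__ == "__main__":
    version, features = read_features(VERSION_TEXT)
    print(version, features)
```

Since `version` is stored as a JSON number, it is parsed as a float and can be compared numerically, for example `3.62 > 3.61`.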


-- 如果输出包含公式,会以tex形式和渲染形式同时显示,方便复制和阅读

+- 如果输出包含公式,会以 tex 形式和渲染形式同时显示,方便复制和阅读
