Mirrored from

https://github.com/binary-husky/gpt_academic.git

Synced 2025-12-06 06:26:47 +00:00

Merge branch 'master' into frontier


@@ -1,5 +1,5 @@

|

||||

> [!IMPORTANT]

|

||||

> `master` branch update (2025.2.4): added deepseek-r1 support

|

||||

> `master` branch update (2025.2.13): the web-search component now supports the Jina API / added deepseek-r1 support

|

||||

> `frontier` development branch update (2024.12.9): updated the conversation timeline feature and improved xelatex paper translation

|

||||

> `wiki` documentation update (2024.12.5): updated the ollama integration guide

|

||||

>

|

||||

|

||||

@@ -81,7 +81,7 @@ API_URL_REDIRECT = {}

|

||||

|

||||

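# Default number of threads allowed to access OpenAI concurrently in multi-threaded function plugins. Free trial users are limited to 3 requests per minute; pay-as-you-go users are limited to 3500 per minute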

|

||||

# In short: free ($5 credit) users should set 3; users with a credit card linked to OpenAI can set 16 or higher. To raise the limit, see: https://platform.openai.com/docs/guides/rate-limits/overview

|

||||

DEFAULT_WORKER_NUM = 3

|

||||

DEFAULT_WORKER_NUM = 8

|

||||

|

||||

|

||||

# Color theme; options: ["Default", "Chuanhu-Small-and-Beautiful", "High-Contrast"]

|

||||

@@ -103,6 +103,7 @@ AVAIL_FONTS = [

|

||||

"华文中宋(STZhongsong)",

|

||||

"华文新魏(STXinwei)",

|

||||

"华文隶书(STLiti)",

|

||||

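# Note: the fonts below require network access. You can add any font you like, as shown below, using the format "font nickname(real English font name@font CSS download URL)"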

|

||||

"思源宋体(Source Han Serif CN VF@https://chinese-fonts-cdn.deno.dev/packages/syst/dist/SourceHanSerifCN/result.css)",

|

||||

"月星楷(Moon Stars Kai HW@https://chinese-fonts-cdn.deno.dev/packages/moon-stars-kai/dist/MoonStarsKaiHW-Regular/result.css)",

|

||||

"珠圆体(MaokenZhuyuanTi@https://chinese-fonts-cdn.deno.dev/packages/mkzyt/dist/猫啃珠圆体/result.css)",

|

||||
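The font entry format described in the note above, "nickname(real name@CSS URL)", can be split with plain string operations. The following is a minimal sketch only: `parse_font_entry` is a hypothetical helper, not a function from this repository, and it assumes the CSS URL part is optional and the nickname contains no opening parenthesis.

```python
from typing import Optional, Tuple


def parse_font_entry(entry: str) -> Tuple[str, str, Optional[str]]:
    """Split 'nickname(real name@css url)' into its three parts.

    Hypothetical helper for illustration; the css url is None for local fonts.
    """
    nickname, _, rest = entry.partition("(")
    if rest.endswith(")"):
        rest = rest[:-1]  # drop the closing parenthesis
    real_name, _, css_url = rest.partition("@")
    return nickname, real_name, css_url or None


local = parse_font_entry("华文隶书(STLiti)")
remote = parse_font_entry(
    "月星楷(Moon Stars Kai HW@https://chinese-fonts-cdn.deno.dev/packages/moon-stars-kai/dist/MoonStarsKaiHW-Regular/result.css)"
)
```

Splitting on the first `(` and the first `@` keeps the URL intact even if it contains further `@` or `)` characters before the final one.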

@@ -343,6 +344,8 @@ NUM_CUSTOM_BASIC_BTN = 4

|

||||

DAAS_SERVER_URLS = [ f"https://niuziniu-biligpt{i}.hf.space/stream" for i in range(1,5) ]

|

||||

|

||||

|

||||

# Used by the internet search component to convert search results into clean Markdown

|

||||

JINA_API_KEY = ""

|

||||

|

||||

"""

|

||||

--------------- Notes on related configuration options ---------------

|

||||

|

||||

@@ -434,36 +434,6 @@ def get_crazy_functions():

|

||||

logger.error(trimmed_format_exc())

|

||||

logger.error("Load function plugin failed")

|

||||

|

||||

# try:

|

||||

# from crazy_functions.联网的ChatGPT import 连接网络回答问题

|

||||

|

||||

# function_plugins.update(

|

||||

# {

|

||||

# "连接网络回答问题(输入问题后点击该插件,需要访问谷歌)": {

|

||||

# "Group": "对话",

|

||||

# "Color": "stop",

|

||||

# "AsButton": False, # 加入下拉菜单中

|

||||

# # "Info": "连接网络回答问题(需要访问谷歌)| 输入参数是一个问题",

|

||||

# "Function": HotReload(连接网络回答问题),

|

||||

# }

|

||||

# }

|

||||

# )

|

||||

# from crazy_functions.联网的ChatGPT_bing版 import 连接bing搜索回答问题

|

||||

|

||||

# function_plugins.update(

|

||||

# {

|

||||

# "连接网络回答问题(中文Bing版,输入问题后点击该插件)": {

|

||||

# "Group": "对话",

|

||||

# "Color": "stop",

|

||||

# "AsButton": False, # 加入下拉菜单中

|

||||

# "Info": "连接网络回答问题(需要访问中文Bing)| 输入参数是一个问题",

|

||||

# "Function": HotReload(连接bing搜索回答问题),

|

||||

# }

|

||||

# }

|

||||

# )

|

||||

# except:

|

||||

# logger.error(trimmed_format_exc())

|

||||

# logger.error("Load function plugin failed")

|

||||

|

||||

try:

|

||||

from crazy_functions.SourceCode_Analyse import 解析任意code项目

|

||||

@@ -771,6 +741,9 @@ def get_multiplex_button_functions():

|

||||

"常规对话":

|

||||

"",

|

||||

|

||||

"查互联网后回答":

|

||||

"查互联网后回答",

|

||||

|

||||

"多模型对话":

|

||||

"询问多个GPT模型", # 映射到上面的 `询问多个GPT模型` 插件

|

||||

|

||||

|

||||

@@ -175,10 +175,17 @@ def scrape_text(url, proxies) -> str:

|

||||

Returns:

|

||||

str: The scraped text

|

||||

"""

|

||||

from loguru import logger

|

||||

headers = {

|

||||

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36',

|

||||

'Content-Type': 'text/plain',

|

||||

}

|

||||

|

||||

# Try Jina first for text extraction

|

||||

if get_conf("JINA_API_KEY"):

|

||||

try: return jina_scrape_text(url)

|

||||

except: logger.debug("Jina API 请求失败,回到旧方法")

|

||||

|

||||

try:

|

||||

response = requests.get(url, headers=headers, proxies=proxies, timeout=8)

|

||||

if response.encoding == "ISO-8859-1": response.encoding = response.apparent_encoding

|

||||

@@ -193,6 +200,24 @@ def scrape_text(url, proxies) -> str:

|

||||

text = "\n".join(chunk for chunk in chunks if chunk)

|

||||

return text

|

||||

|

||||

|

||||

def jina_scrape_text(url) -> str:

|

||||

"jina_39727421c8fa4e4fa9bd698e5211feaaDyGeVFESNrRaepWiLT0wmHYJSh-d"

|

||||

headers = {

|

||||

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.61 Safari/537.36',

|

||||

'Content-Type': 'text/plain',

|

||||

"X-Retain-Images": "none",

|

||||

"Authorization": f'Bearer {get_conf("JINA_API_KEY")}'

|

||||

}

|

||||

response = requests.get("https://r.jina.ai/" + url, headers=headers, proxies=None, timeout=8)

|

||||

if response.status_code != 200:

|

||||

raise ValueError("Jina API 请求失败,开始尝试旧方法!" + response.text)

|

||||

if response.encoding == "ISO-8859-1": response.encoding = response.apparent_encoding

|

||||

result = response.text

|

||||

result = result.replace("\\[", "[").replace("\\]", "]").replace("\\(", "(").replace("\\)", ")")

|

||||

return result

|

||||

|

||||

|

||||

def internet_search_with_analysis_prompt(prompt, analysis_prompt, llm_kwargs, chatbot):

|

||||

from toolbox import get_conf

|

||||

proxies = get_conf('proxies')

|

||||
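The Jina-first flow in `scrape_text`, with a fall back to the older `requests`-based path, can be sketched as follows. This is a minimal sketch under stated assumptions, not the project's code: `fetch_with_fallback`, `unescape_markdown`, `primary`, and `fallback` are hypothetical names, and the fetchers are passed in as callables so the example runs without network access.

```python
from typing import Callable, Optional


def unescape_markdown(text: str) -> str:
    """Undo the backslash escapes on brackets and parentheses."""
    for escaped, plain in (("\\[", "["), ("\\]", "]"), ("\\(", "("), ("\\)", ")")):
        text = text.replace(escaped, plain)
    return text


def fetch_with_fallback(
    url: str,
    primary: Optional[Callable[[str], str]],
    fallback: Callable[[str], str],
) -> str:
    """Use the primary fetcher when configured; on any error use the fallback."""
    if primary is not None:
        try:
            # Return the cleaned text, not the raw response body.
            return unescape_markdown(primary(url))
        except Exception:
            pass  # fall through to the legacy path
    return fallback(url)
```

Passing `primary=None` models the case where no `JINA_API_KEY` is configured, so the legacy path is used directly.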

@@ -246,23 +271,52 @@ def 连接网络回答问题(txt, llm_kwargs, plugin_kwargs, chatbot, history, s

|

||||

urls = search_optimizer(txt, proxies, optimizer_history, llm_kwargs, optimizer, categories, searxng_url, engines)

|

||||

history = []

|

||||

if len(urls) == 0:

|

||||

chatbot.append((f"结论:{txt}",

|

||||

"[Local Message] 受到限制,无法从searxng获取信息!请尝试更换搜索引擎。"))

|

||||

chatbot.append((f"结论:{txt}", "[Local Message] 受到限制,无法从searxng获取信息!请尝试更换搜索引擎。"))

|

||||

yield from update_ui(chatbot=chatbot, history=history) # refresh the UI

|

||||

return

|

||||

|

||||

# ------------- < Step 2: visit the web pages one by one > -------------

|

||||

from concurrent.futures import ThreadPoolExecutor

|

||||

from textwrap import dedent

|

||||

max_search_result = 5 # maximum number of web pages to include in the results

|

||||

if optimizer == "开启(增强)":

|

||||

max_search_result = 8

|

||||

chatbot.append(["联网检索中 ...", None])

|

||||

template = dedent("""

|

||||

<details>

|

||||

<summary>{TITLE}</summary>

|

||||

<div class="search_result">{URL}</div>

|

||||

<div class="search_result">{CONTENT}</div>

|

||||

</details>

|

||||

""")

|

||||

|

||||

buffer = ""

|

||||

|

||||

# Create a thread pool

|

||||

with ThreadPoolExecutor(max_workers=5) as executor:

|

||||

# Submit tasks to the thread pool

|

||||

futures = []

|

||||

for index, url in enumerate(urls[:max_search_result]):

|

||||

res = scrape_text(url['link'], proxies)

|

||||

prefix = f"第{index}份搜索结果 [源自{url['source'][0]}搜索] ({url['title'][:25]}):"

|

||||

future = executor.submit(scrape_text, url['link'], proxies)

|

||||

futures.append((index, future, url))

|

||||

|

||||

# Process the completed tasks

|

||||

for index, future, url in futures:

|

||||

# Start

|

||||

prefix = f"正在加载 第{index+1}份搜索结果 [源自{url['source'][0]}搜索] ({url['title'][:25]}):"

|

||||

string_structure = template.format(TITLE=prefix, URL=url['link'], CONTENT="正在加载,请稍后 ......")

|

||||

yield from update_ui_lastest_msg(lastmsg=(buffer + string_structure), chatbot=chatbot, history=history, delay=0.1) # refresh the UI

|

||||

|

||||

# Fetch the result

|

||||

res = future.result()

|

||||

|

||||

# Display the result

|

||||

prefix = f"第{index+1}份搜索结果 [源自{url['source'][0]}搜索] ({url['title'][:25]}):"

|

||||

string_structure = template.format(TITLE=prefix, URL=url['link'], CONTENT=res[:1000] + "......")

|

||||

buffer += string_structure

|

||||

|

||||

# Update the history

|

||||

history.extend([prefix, res])

|

||||

res_squeeze = res.replace('\n', '...')

|

||||

chatbot[-1] = [prefix + "\n\n" + res_squeeze[:500] + "......", None]

|

||||

yield from update_ui(chatbot=chatbot, history=history) # refresh the UI

|

||||

yield from update_ui_lastest_msg(lastmsg=buffer, chatbot=chatbot, history=history, delay=0.1) # refresh the UI

|

||||

|

||||

# ------------- < Step 3: ChatGPT synthesis > -------------

|

||||

if (optimizer != "开启(增强)"):

|

||||

|

||||
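The change in this hunk replaces sequential page fetching with a thread pool: all downloads are submitted first, then the results are consumed in submission order so the UI can show them one by one. A minimal sketch of that pattern follows, assuming a hypothetical `scrape_in_order` helper and an injected `scrape` callable in place of `scrape_text`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Tuple


def scrape_in_order(
    urls: List[str],
    scrape: Callable[[str], str],
    max_workers: int = 5,
) -> Iterator[Tuple[int, str, str]]:
    """Start all downloads at once, then yield results in submission order."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        futures = [(i, url, executor.submit(scrape, url)) for i, url in enumerate(urls)]
        for index, url, future in futures:
            # result() blocks until this particular page is done; the other
            # downloads keep running in the background meanwhile.
            yield index + 1, url, future.result()
```

Iterating over the futures in submission order (rather than with `as_completed`) keeps the numbering of the search results stable, at the cost of waiting on a slow early page even when later pages are already finished.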

@@ -38,11 +38,12 @@ class NetworkGPT_Wrap(GptAcademicPluginTemplate):

|

||||

}

|

||||

return gui_definition

|

||||

|

||||

def execute(txt, llm_kwargs, plugin_kwargs, chatbot, history, system_prompt, user_request):

|

||||

def execute(txt, llm_kwargs, plugin_kwargs:dict, chatbot, history, system_prompt, user_request):

|

||||

"""

|

||||

Execute the plugin

|

||||

"""

|

||||

if plugin_kwargs["categories"] == "网页": plugin_kwargs["categories"] = "general"

|

||||

if plugin_kwargs["categories"] == "学术论文": plugin_kwargs["categories"] = "science"

|

||||

if plugin_kwargs.get("categories", None) == "网页": plugin_kwargs["categories"] = "general"

|

||||

elif plugin_kwargs.get("categories", None) == "学术论文": plugin_kwargs["categories"] = "science"

|

||||

else: plugin_kwargs["categories"] = "general"

|

||||

yield from 连接网络回答问题(txt, llm_kwargs, plugin_kwargs, chatbot, history, system_prompt, user_request)

|

||||

|

||||

|

||||
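The new `if / elif / else` chain in `execute` maps a UI label to a search category and defaults to `"general"` when the key is missing or unrecognized. The same behavior can be sketched with a lookup table; `CATEGORY_ALIASES` and `normalize_category` are hypothetical names introduced here for illustration.

```python
# Hypothetical lookup table: UI label -> searxng category.
CATEGORY_ALIASES = {"网页": "general", "学术论文": "science"}


def normalize_category(plugin_kwargs: dict) -> dict:
    """Map the UI label to a search category, defaulting to "general"."""
    label = plugin_kwargs.get("categories")
    plugin_kwargs["categories"] = CATEGORY_ALIASES.get(label, "general")
    return plugin_kwargs
```

Using `dict.get` on both lookups avoids the `KeyError` that the earlier `plugin_kwargs["categories"]` access could raise when the key was absent.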

@@ -1072,7 +1072,7 @@ if "zhipuai" in AVAIL_LLM_MODELS: # zhipuai is an alias of glm-4, kept for backward compatibility

|

||||

})

|

||||

except:

|

||||

logger.error(trimmed_format_exc())

|

||||

# -=-=-=-=-=-=- High-Flyer DeepSeek LLM -=-=-=-=-=-=-

|

||||

# -=-=-=-=-=-=- High-Flyer DeepSeek local LLM -=-=-=-=-=-=-

|

||||

if "deepseekcoder" in AVAIL_LLM_MODELS: # deepseekcoder

|

||||

try:

|

||||

from .bridge_deepseekcoder import predict_no_ui_long_connection as deepseekcoder_noui

|

||||

|

||||

@@ -6,7 +6,6 @@ from toolbox import get_conf

|

||||

from request_llms.local_llm_class import LocalLLMHandle, get_local_llm_predict_fns

|

||||

from threading import Thread

|

||||

from loguru import logger

|

||||

import torch

|

||||

import os

|

||||

|

||||

def download_huggingface_model(model_name, max_retry, local_dir):

|

||||

@@ -29,6 +28,7 @@ class GetCoderLMHandle(LocalLLMHandle):

|

||||

self.cmd_to_install = cmd_to_install

|

||||

|

||||

def load_model_and_tokenizer(self):

|

||||

import torch

|

||||

# 🏃♂️🏃♂️🏃♂️ 子进程执行

|

||||

with ProxyNetworkActivate('Download_LLM'):

|

||||

from transformers import AutoTokenizer, AutoModelForCausalLM, TextIteratorStreamer

|

||||

|

||||

@@ -512,7 +512,7 @@ def generate_payload(inputs:str, llm_kwargs:dict, history:list, system_prompt:st

|

||||

model, _ = read_one_api_model_name(model)

|

||||

if llm_kwargs['llm_model'].startswith('openrouter-'):

|

||||

model = llm_kwargs['llm_model'][len('openrouter-'):]

|

||||

model= read_one_api_model_name(model)

|

||||

model, _= read_one_api_model_name(model)

|

||||

if model == "gpt-3.5-random": # pick one at random to get around OpenAI's rate limit

|

||||

model = random.choice([

|

||||

"gpt-3.5-turbo",

|

||||

|

||||

@@ -2,15 +2,9 @@ import json

|

||||

import time

|

||||

import traceback

|

||||

import requests

|

||||

from loguru import logger

|

||||

|

||||

# Put your own secrets, such as API keys and proxy URLs, in config_private.py

|

||||

# When loading, first check whether a private config_private file exists (not tracked by git); if so, it overrides the original config file

|

||||

from toolbox import (

|

||||

get_conf,

|

||||

update_ui,

|

||||

is_the_upload_folder,

|

||||

)

|

||||

from loguru import logger

|

||||

from toolbox import get_conf, is_the_upload_folder, update_ui, update_ui_lastest_msg

|

||||

|

||||

proxies, TIMEOUT_SECONDS, MAX_RETRY = get_conf(

|

||||

"proxies", "TIMEOUT_SECONDS", "MAX_RETRY"

|

||||

@@ -39,27 +33,35 @@ def decode_chunk(chunk):

|

||||

Parses the "content" and "finish_reason" fields (and also returns "reasoning_content" if chain-of-thought is supported)

|

||||

"""

|

||||

chunk = chunk.decode()

|

||||

respose = ""

|

||||

response = ""

|

||||

reasoning_content = ""

|

||||

finish_reason = "False"

|

||||

|

||||

# Handle both text/json and text/event-stream response types

|

||||

if chunk.startswith("data: "):

|

||||

chunk = chunk[6:]

|

||||

else:

|

||||

chunk = chunk

|

||||

|

||||

try:

|

||||

chunk = json.loads(chunk[6:])

|

||||

chunk = json.loads(chunk)

|

||||

except:

|

||||

respose = ""

|

||||

response = ""

|

||||

finish_reason = chunk

|

||||

|

||||

# Error handling

|

||||

if "error" in chunk:

|

||||

respose = "API_ERROR"

|

||||

response = "API_ERROR"

|

||||

try:

|

||||

chunk = json.loads(chunk)

|

||||

finish_reason = chunk["error"]["code"]

|

||||

except:

|

||||

finish_reason = "API_ERROR"

|

||||

return respose, finish_reason

|

||||

return response, reasoning_content, finish_reason

|

||||

|

||||

try:

|

||||

if chunk["choices"][0]["delta"]["content"] is not None:

|

||||

respose = chunk["choices"][0]["delta"]["content"]

|

||||

response = chunk["choices"][0]["delta"]["content"]

|

||||

except:

|

||||

pass

|

||||

try:

|

||||

@@ -71,7 +73,7 @@ def decode_chunk(chunk):

|

||||

finish_reason = chunk["choices"][0]["finish_reason"]

|

||||

except:

|

||||

pass

|

||||

return respose, reasoning_content, finish_reason

|

||||

return response, reasoning_content, finish_reason, str(chunk)

|

||||

|

||||

|

||||

def generate_message(input, model, key, history, max_output_token, system_prompt, temperature):

|

||||
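After this change `decode_chunk` returns four values, the last being the decoded chunk text, which lets callers recognize non-JSON lines such as `: keep-alive`. The following is a simplified sketch of that contract, not the repository's implementation: `decode_sse_chunk` is a hypothetical name, and the error-payload branch of the original is omitted.

```python
import json
from typing import Tuple


def decode_sse_chunk(raw: bytes) -> Tuple[str, str, str, str]:
    """Return (content, reasoning_content, finish_reason, decoded_text)."""
    text = raw.decode()
    # Server-sent events prefix each payload with "data: "; plain JSON has no prefix.
    body = text[6:] if text.startswith("data: ") else text
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        # Not JSON (for example ": keep-alive"): hand the raw text back.
        return "", "", body, body
    if not isinstance(data, dict):
        return "", "", body, body
    choices = data.get("choices") or [{}]
    delta = choices[0].get("delta") or {}
    content = delta.get("content") or ""
    reasoning = delta.get("reasoning_content") or ""
    # The string "False" mirrors the default used in the diff above.
    finish_reason = choices[0].get("finish_reason") or "False"
    return content, reasoning, finish_reason, body
```

A caller can then compare the fourth element against `': keep-alive'` to show a waiting indicator instead of treating the line as model output.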

@@ -106,7 +108,7 @@ def generate_message(input, model, key, history, max_output_token, system_prompt

|

||||

what_i_ask_now["role"] = "user"

|

||||

what_i_ask_now["content"] = input

|

||||

messages.append(what_i_ask_now)

|

||||

playload = {

|

||||

payload = {

|

||||

"model": model,

|

||||

"messages": messages,

|

||||

"temperature": temperature,

|

||||

@@ -114,7 +116,7 @@ def generate_message(input, model, key, history, max_output_token, system_prompt

|

||||

"max_tokens": max_output_token,

|

||||

}

|

||||

|

||||

return headers, playload

|

||||

return headers, payload

|

||||

|

||||

|

||||

def get_predict_function(

|

||||

@@ -141,7 +143,7 @@ def get_predict_function(

|

||||

history=[],

|

||||

sys_prompt="",

|

||||

observe_window=None,

|

||||

console_slience=False,

|

||||

console_silence=False,

|

||||

):

|

||||

"""

|

||||

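Send to chatGPT and wait for the reply in one shot, without showing intermediate output. Internally, streaming is used so that a dropped connection midway does not break the request.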

|

||||

@@ -157,12 +159,12 @@ def get_predict_function(

|

||||

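Passes the already-generated output across threads; most of the time it only serves a fancy visual effect and can be left empty. observe_window[0]: observation window. observe_window[1]: watchdog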

|

||||

"""

|

||||

from .bridge_all import model_info

|

||||

watch_dog_patience = 5 # watchdog patience: no biting for 5 seconds(and what it bites is not a person

|

||||

watch_dog_patience = 5 # watchdog patience: no biting for 5 seconds (and what it bites is not a person)

|

||||

if len(APIKEY) == 0:

|

||||

raise RuntimeError(f"APIKEY为空,请检查配置文件的{APIKEY}")

|

||||

if inputs == "":

|

||||

inputs = "你好👋"

|

||||

headers, playload = generate_message(

|

||||

headers, payload = generate_message(

|

||||

input=inputs,

|

||||

model=llm_kwargs["llm_model"],

|

||||

key=APIKEY,

|

||||

@@ -182,7 +184,7 @@ def get_predict_function(

|

||||

endpoint,

|

||||

headers=headers,

|

||||

proxies=None if disable_proxy else proxies,

|

||||

json=playload,

|

||||

json=payload,

|

||||

stream=True,

|

||||

timeout=TIMEOUT_SECONDS,

|

||||

)

|

||||

@@ -198,7 +200,7 @@ def get_predict_function(

|

||||

result = ""

|

||||

finish_reason = ""

|

||||

if reasoning:

|

||||

resoning_buffer = ""

|

||||

reasoning_buffer = ""

|

||||

|

||||

stream_response = response.iter_lines()

|

||||

while True:

|

||||

@@ -210,7 +212,7 @@ def get_predict_function(

|

||||

break

|

||||

except requests.exceptions.ConnectionError:

|

||||

chunk = next(stream_response) # failed; retry once. If it fails again, there is nothing more we can do.

|

||||

response_text, reasoning_content, finish_reason = decode_chunk(chunk)

|

||||

response_text, reasoning_content, finish_reason, decoded_chunk = decode_chunk(chunk)

|

||||

# The first chunk of the stream is empty; keep waiting

|

||||

if response_text == "" and (reasoning == False or reasoning_content == "") and finish_reason != "False":

|

||||

continue

|

||||

@@ -226,12 +228,12 @@ def get_predict_function(

|

||||

if chunk:

|

||||

try:

|

||||

if finish_reason == "stop":

|

||||

if not console_slience:

|

||||

if not console_silence:

|

||||

print(f"[response] {result}")

|

||||

break

|

||||

result += response_text

|

||||

if reasoning:

|

||||

resoning_buffer += reasoning_content

|

||||

reasoning_buffer += reasoning_content

|

||||

if observe_window is not None:

|

||||

# Observation window: expose the data received so far

|

||||

if len(observe_window) >= 1:

|

||||

@@ -247,9 +249,8 @@ def get_predict_function(

|

||||

logger.error(error_msg)

|

||||

raise RuntimeError("Json解析不合常规")

|

||||

if reasoning:

|

||||

# Wrap the reasoning part in a quote block (>)

|

||||

return '\n'.join(map(lambda x: '> ' + x, resoning_buffer.split('\n'))) + \

|

||||

'\n\n' + result

|

||||

paragraphs = ''.join([f'<p style="margin: 1.25em 0;">{line}</p>' for line in reasoning_buffer.split('\n')])

|

||||

return f'''<div class="reasoning_process" >{paragraphs}</div>\n\n''' + result

|

||||

return result

|

||||

|

||||

def predict(

|

||||
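The hunk above switches the reasoning output from Markdown quote lines (`> ...`) to an HTML block styled by the `reasoning_process` class. A minimal sketch of that rendering step follows. `render_reasoning` is a hypothetical name, and the `html.escape` call is an addition that is not in the diff, included on the assumption that model output should not be injected into the page as raw HTML.

```python
import html


def render_reasoning(reasoning: str, answer: str) -> str:
    """Wrap each reasoning line in a <p> inside a styled <div>, then append the answer."""
    paragraphs = "".join(
        # html.escape is an assumption added here; the diff inserts lines unescaped.
        f'<p style="margin: 1.25em 0;">{html.escape(line)}</p>'
        for line in reasoning.split("\n")
    )
    return f'<div class="reasoning_process">{paragraphs}</div>\n\n{answer}'
```

Keeping the answer outside the `<div>` means it is still rendered as ordinary Markdown, while the reasoning picks up the smaller italic style defined in the stylesheet.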

@@ -268,7 +269,7 @@ def get_predict_function(

|

||||

inputs is the input for this query

|

||||

top_p and temperature are chatGPT's internal tuning parameters

|

||||

history is the list of previous conversation turns (note that overly long inputs or history will trigger a token-overflow error)

|

||||

chatbot is the conversation list shown in the WebUI; modify it and then yeild it to update the chat interface directly

|

||||

chatbot is the conversation list shown in the WebUI; modify it and then yield it to update the chat interface directly

|

||||

additional_fn indicates which button was clicked; the buttons are defined in functional.py

|

||||

"""

|

||||

from .bridge_all import model_info

|

||||

@@ -299,7 +300,7 @@ def get_predict_function(

|

||||

) # refresh the UI

|

||||

time.sleep(2)

|

||||

|

||||

headers, playload = generate_message(

|

||||

headers, payload = generate_message(

|

||||

input=inputs,

|

||||

model=llm_kwargs["llm_model"],

|

||||

key=APIKEY,

|

||||

@@ -321,7 +322,7 @@ def get_predict_function(

|

||||

endpoint,

|

||||

headers=headers,

|

||||

proxies=None if disable_proxy else proxies,

|

||||

json=playload,

|

||||

json=payload,

|

||||

stream=True,

|

||||

timeout=TIMEOUT_SECONDS,

|

||||

)

|

||||

@@ -343,14 +344,21 @@ def get_predict_function(

|

||||

gpt_reasoning_buffer = ""

|

||||

|

||||

stream_response = response.iter_lines()

|

||||

wait_counter = 0

|

||||

while True:

|

||||

try:

|

||||

chunk = next(stream_response)

|

||||

except StopIteration:

|

||||

if wait_counter != 0 and gpt_replying_buffer == "":

|

||||

yield from update_ui_lastest_msg(lastmsg="模型调用失败 ...", chatbot=chatbot, history=history, msg="failed")

|

||||

break

|

||||

except requests.exceptions.ConnectionError:

|

||||

chunk = next(stream_response) # failed; retry once. If it fails again, there is nothing more we can do.

|

||||

response_text, reasoning_content, finish_reason = decode_chunk(chunk)

|

||||

response_text, reasoning_content, finish_reason, decoded_chunk = decode_chunk(chunk)

|

||||

if decoded_chunk == ': keep-alive':

|

||||

wait_counter += 1

|

||||

yield from update_ui_lastest_msg(lastmsg="等待中 " + "".join(["."] * (wait_counter%10)), chatbot=chatbot, history=history, msg="waiting ...")

|

||||

continue

|

||||

# The first chunk of the stream is empty; keep waiting

|

||||

if response_text == "" and (reasoning == False or reasoning_content == "") and finish_reason != "False":

|

||||

status_text = f"finish_reason: {finish_reason}"

|

||||

@@ -367,7 +375,7 @@ def get_predict_function(

|

||||

chunk_decoded = chunk.decode()

|

||||

chatbot[-1] = (

|

||||

chatbot[-1][0],

|

||||

"[Local Message] {finish_reason},获得以下报错信息:\n"

|

||||

f"[Local Message] {finish_reason}, 获得以下报错信息:\n"

|

||||

+ chunk_decoded,

|

||||

)

|

||||

yield from update_ui(

|

||||

@@ -385,7 +393,8 @@ def get_predict_function(

|

||||

if reasoning:

|

||||

gpt_replying_buffer += response_text

|

||||

gpt_reasoning_buffer += reasoning_content

|

||||

history[-1] = '\n'.join(map(lambda x: '> ' + x, gpt_reasoning_buffer.split('\n'))) + '\n\n' + gpt_replying_buffer

|

||||

paragraphs = ''.join([f'<p style="margin: 1.25em 0;">{line}</p>' for line in gpt_reasoning_buffer.split('\n')])

|

||||

history[-1] = f'<div class="reasoning_process">{paragraphs}</div>\n\n---\n\n' + gpt_replying_buffer

|

||||

else:

|

||||

gpt_replying_buffer += response_text

|

||||

# If an exception is raised here, the text is usually too long; see the output of get_full_error for details

|

||||

|

||||

@@ -111,6 +111,8 @@ def extract_archive(file_path, dest_dir):

|

||||

member_path = os.path.normpath(member.name)

|

||||

full_path = os.path.join(dest_dir, member_path)

|

||||

full_path = os.path.abspath(full_path)

|

||||

if member.islnk() or member.issym():

|

||||

raise Exception(f"Attempted Symlink in {member.name}")

|

||||

if not full_path.startswith(os.path.abspath(dest_dir) + os.sep):

|

||||

raise Exception(f"Attempted Path Traversal in {member.name}")

|

||||

|

||||

|

||||
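The two lines added to `extract_archive` reject hard links and symbolic links before the existing path-traversal check runs. Both checks can be sketched together for a single tar member. `check_member` is a hypothetical helper written for illustration, and it raises `ValueError` rather than the bare `Exception` used in the diff.

```python
import os
import tarfile


def check_member(member: tarfile.TarInfo, dest_dir: str) -> None:
    """Raise if a tar member is a link or would land outside dest_dir."""
    if member.issym() or member.islnk():
        # A link could point outside dest_dir even when its own path looks safe.
        raise ValueError(f"link not allowed: {member.name}")
    root = os.path.abspath(dest_dir)
    target = os.path.abspath(os.path.join(root, os.path.normpath(member.name)))
    if not target.startswith(root + os.sep):
        raise ValueError(f"path traversal: {member.name}")
```

Comparing against `root + os.sep` rather than `root` alone avoids accepting a sibling directory whose name merely starts with the destination's name.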

@@ -45,28 +45,161 @@ Any folded content here. It requires an empty line just above it.

|

||||

|

||||

md ="""

|

||||

|

||||

在这种场景中,您希望机器 B 能够通过轮询机制来间接地“请求”机器 A,而实际上机器 A 只能主动向机器 B 发出请求。这是一种典型的客户端-服务器轮询模式。下面是如何实现这种机制的详细步骤:

|

||||

<details>

|

||||

<summary>第0份搜索结果 [源自google搜索] (汤姆·赫兰德):</summary>

|

||||

<div class="search_result">https://baike.baidu.com/item/%E6%B1%A4%E5%A7%86%C2%B7%E8%B5%AB%E5%85%B0%E5%BE%B7/3687216</div>

|

||||

<div class="search_result">Title: 汤姆·赫兰德

|

||||

|

||||

### 机器 B 的实现

|

||||

URL Source: https://baike.baidu.com/item/%E6%B1%A4%E5%A7%86%C2%B7%E8%B5%AB%E5%85%B0%E5%BE%B7/3687216

|

||||

|

||||

1. **安装 FastAPI 和必要的依赖库**:

|

||||

```bash

|

||||

pip install fastapi uvicorn

|

||||

```

|

||||

Markdown Content:

|

||||

网页新闻贴吧知道网盘图片视频地图文库资讯采购百科

|

||||

百度首页

|

||||

登录

|

||||

注册

|

||||

进入词条

|

||||

全站搜索

|

||||

帮助

|

||||

首页

|

||||

秒懂百科

|

||||

特色百科

|

||||

知识专题

|

||||

加入百科

|

||||

百科团队

|

||||

权威合作

|

||||

个人中心

|

||||

汤姆·赫兰德

|

||||

播报

|

||||

讨论

|

||||

上传视频

|

||||

英国男演员

|

||||

汤姆·赫兰德(Tom Holland),1996年6月1日出生于英国英格兰泰晤士河畔金斯顿,英国男演员。2008年,出演音乐剧《跳出我天地》而崭露头角。2010年,作为主演参加音乐剧《跳出我天地》的五周年特别演出。2012年10月11日,主演的个人首部电影《海啸奇迹》上映,并凭该电影获得第84届美国国家评论协会奖最具突破男演员奖。2016年10月15日,与查理·汉纳姆、西耶娜·米勒合作出演的电影《 ... >>>

|

||||

|

||||

2. **创建 FastAPI 服务**:

|

||||

```python

|

||||

from fastapi import FastAPI

|

||||

from fastapi.responses import JSONResponse

|

||||

from uuid import uuid4

|

||||

from threading import Lock

|

||||

import time

|

||||

目录

|

||||

1早年经历

|

||||

2演艺经历

|

||||

▪影坛新星

|

||||

▪角色多变

|

||||

▪跨界翘楚

|

||||

3个人生活

|

||||

▪家庭

|

||||

▪恋情

|

||||

▪社交

|

||||

4主要作品

|

||||

▪参演电影

|

||||

▪参演电视剧

|

||||

▪配音作品

|

||||

▪导演作品

|

||||

▪杂志写真

|

||||

5社会活动

|

||||

6获奖记录

|

||||

7人物评价

|

||||

基本信息

|

||||

汤姆·赫兰德(Tom Holland),1996年6月1日出生于英国英格兰泰晤士河畔金斯顿,英国男演员。 [67]

|

||||

2008年,出演音乐剧《跳出我天地》而崭露头角 [1]。2010年,作为主演参加音乐剧《跳出我天地》的五周年特别演出 [2]。2012年10月11日,主演的个人首部电影《海啸奇迹》上映,并凭该电影获得第84届美国国家评论协会奖最具突破男演员奖 [3]。2016年10月15日,与查理·汉纳姆、西耶娜·米勒合作出演的电影《迷失Z城》在纽约电影节首映 [17];2017年,主演的《蜘蛛侠:英雄归来》上映,他凭该电影获得第19届青少年选择奖最佳暑期电影男演员奖,以及第70届英国电影和电视艺术学院奖最佳新星奖。 [72]2019年,主演的电影《蜘蛛侠:英雄远征》上映 [5];同年,凭借该电影获得第21届青少年选择奖最佳夏日电影男演员奖 [6]。2024年4月,汤姆·霍兰德主演的伦敦西区新版舞台剧《罗密欧与朱丽叶》公布演员名单。 [66]

|

||||

2024年,......</div>

|

||||

</details>

|

||||

|

||||

app = FastAPI()

|

||||

<details>

|

||||

<summary>第1份搜索结果 [源自google搜索] (汤姆·霍兰德):</summary>

|

||||

<div class="search_result">https://zh.wikipedia.org/zh-hans/%E6%B1%A4%E5%A7%86%C2%B7%E8%B5%AB%E5%85%B0%E5%BE%B7</div>

|

||||

<div class="search_result">Title: 汤姆·赫兰德

|

||||

|

||||

# 字典用于存储请求和状态

|

||||

requests = {}

|

||||

process_lock = Lock()

|

||||

URL Source: https://zh.wikipedia.org/zh-hans/%E6%B1%A4%E5%A7%86%C2%B7%E8%B5%AB%E5%85%B0%E5%BE%B7

|

||||

|

||||

Published Time: 2015-06-24T01:08:01Z

|

||||

|

||||

Markdown Content:

|

||||

| 汤姆·霍兰德

|

||||

Tom Holland |

|

||||

| --- |

|

||||

| [](https://zh.wikipedia.org/wiki/File:Tom_Holland_by_Gage_Skidmore.jpg)

|

||||

|

||||

2016年在[圣地牙哥国际漫画展](https://zh.wikipedia.org/wiki/%E8%81%96%E5%9C%B0%E7%89%99%E5%93%A5%E5%9C%8B%E9%9A%9B%E6%BC%AB%E7%95%AB%E5%B1%95 "圣地牙哥国际漫画展")的霍兰德

|

||||

|

||||

|

||||

|

||||

|

|

||||

| 男演员 |

|

||||

| 昵称 | 荷兰弟[\[1\]](https://zh.wikipedia.org/zh-hans/%E6%B1%A4%E5%A7%86%C2%B7%E8%B5%AB%E5%85%B0%E5%BE%B7#cite_note-1) |

|

||||

| 出生 | 汤玛斯·史丹利·霍兰德

|

||||

(Thomas Stanley Holland)[\[2\]](https://zh.wikipedia.org/zh-hans/%E6%B1%A4%E5%A7%86%C2%B7%E8%B5%AB%E5%85%B0%E5%BE%B7#cite_note-2)

|

||||

|

||||

|

||||

1996年6月1日(28岁)

|

||||

|

||||

英国[英格兰](https://zh.wikipedia.org/wiki/%E8%8B%B1%E6%A0%BC%E8%98%AD "英格兰")[泰晤士河畔金斯顿](https://zh.wikipedia.org/wiki/%E6%B3%B0%E6%99%A4......</div>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>第2份搜索结果 [源自google搜索] (为什么汤姆赫兰德被称为荷兰弟?):</summary>

|

||||

<div class="search_result">https://www.zhihu.com/question/363988307</div>

|

||||

<div class="search_result">Title: 为什么汤姆赫兰德被称为荷兰弟? - 知乎

|

||||

|

||||

URL Source: https://www.zhihu.com/question/363988307

|

||||

|

||||

Markdown Content:

|

||||

要说漫威演员里面,谁是最牛的存在,不好说,各有各的看法,但要说谁是最能剧透的,毫无疑问,是我们的汤姆赫兰德荷兰弟,可以说,他算得上是把剧透给玩明白了,先后剧透了不少的电影桥段,以至于漫威后面像防贼一样防着人家荷兰弟,可大家知道吗?你永远想象不到荷兰弟的嘴巴到底有多能漏风?

|

||||

|

||||

|

||||

|

||||

故事要回到《侏罗纪世界2》的筹备期间,当时,荷兰弟也参与了面试,计划在剧中饰演一个角色,原本,这也没啥,这都是好莱坞的传统了,可是,当时的导演胡安根本不知道荷兰弟的“风光伟绩”,于是乎,人家便屁颠屁颠把侏罗纪世界2的资料拿过来给荷兰弟,虽然,后面没有让荷兰弟出演这部电影,但导演似乎忘了他的嘴巴是开过光的。

|

||||

|

||||

|

||||

|

||||

荷兰弟把剧情刻在了脑子

|

||||

......</div>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

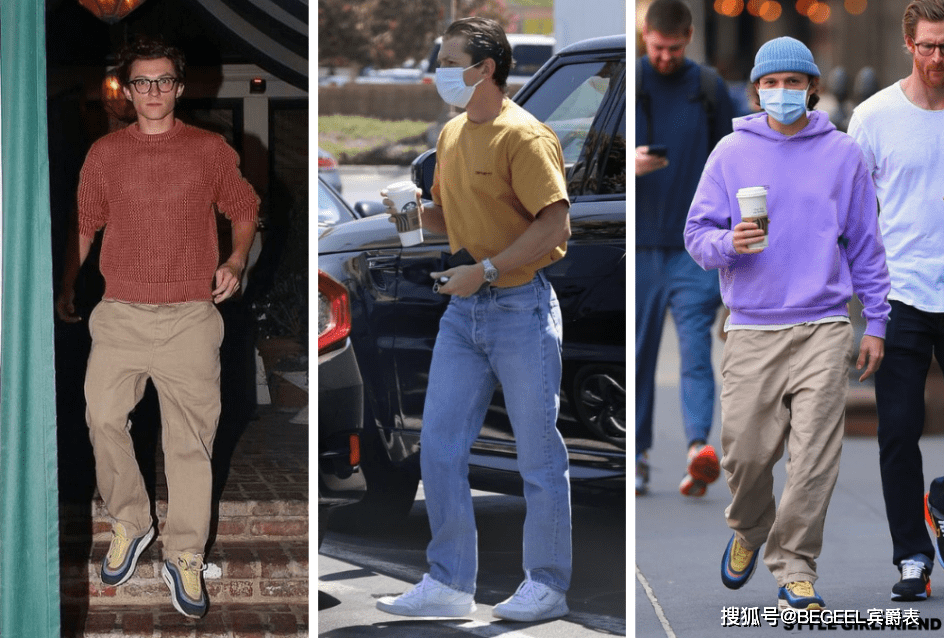

<summary>第3份搜索结果 [源自google搜索] (爱戴名表被喷配不上赞达亚,荷兰弟曝近照气质大变,2):</summary>

|

||||

<div class="search_result">https://www.sohu.com/a/580380519_120702487</div>

|

||||

<div class="search_result">Title: 爱戴名表被喷配不上赞达亚,荷兰弟曝近照气质大变,26岁资产惊人_蜘蛛侠_手表_罗伯特·唐尼

|

||||

|

||||

URL Source: https://www.sohu.com/a/580380519_120702487

|

||||

|

||||

Markdown Content:

|

||||

2022-08-27 19:00 来源: [BEGEEL宾爵表](https://www.sohu.com/a/580380519_120702487?spm=smpc.content-abroad.content.1.1739375950559fBhgNpP)

|

||||

|

||||

发布于:广东省

|

||||

|

||||

近日,大家熟悉的荷兰弟,也就演漫威超级英雄“蜘蛛侠”而走红的英国男星汤姆·赫兰德(Tom Holland),最近在没有任何预警的情况下宣布自己暂停使用社交媒体,原因网络暴力已经严重影响到他的心理健康了。虽然自出演蜘蛛侠以来,对荷兰弟的骂声就没停过,但不可否认他确实是一位才貌双全的好演员,同时也是一位拥有高雅品味的地道英伦绅士,从他近年名表收藏的趋势也能略知一二。

|

||||

|

||||

|

||||

|

||||

2016年,《美国队长3:内战》上映,汤姆·赫兰德扮演的“史上最嫩”蜘蛛侠也正式登场。这个美国普通学生,由于意外被一只受过放射性感染的蜘蛛咬到,并因此获得超能力,化身邻居英雄蜘蛛侠警恶惩奸。和蜘蛛侠彼得·帕克一样,当时年仅20岁的荷兰弟无论戏里戏外的穿搭都是少年感十足,走的阳光邻家大男孩路线,手上戴的最多的就是来自卡西欧的电子表,还有来自Nixon sentry的手表,千元级别甚至是百元级。

|

||||

|

||||

20岁的荷兰弟走的是邻家大男孩路线

|

||||

|

||||

|

||||

|

||||

随着荷兰弟主演的《蜘蛛侠:英雄归来》上演,第三代蜘蛛侠的话痨性格和年轻活力的形象瞬间圈粉无数。荷兰弟的知名度和演艺收入都大幅度增长,他的穿衣品味也渐渐从稚嫩少年风转变成轻熟绅士风。从简单的T恤短袖搭配牛仔裤,开始向更加丰富的造型发展,其中变化最明显的就是他手腕上的表。

|

||||

|

||||

荷兰弟的衣品日......</div>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>第4份搜索结果 [源自google搜索] (荷兰弟居然要休息一年,因演戏演到精神分裂…):</summary>

|

||||

<div class="search_result">https://www.sohu.com/a/683718058_544020</div>

|

||||

<div class="search_result">Title: 荷兰弟居然要休息一年,因演戏演到精神分裂…_Holland_Tom_工作

|

||||

|

||||

URL Source: https://www.sohu.com/a/683718058_544020

|

||||

|

||||

Markdown Content:

|

||||

荷兰弟居然要休息一年,因演戏演到精神分裂…\_Holland\_Tom\_工作

|

||||

===============

|

||||

|

||||

* [](http://www.sohu.com/?spm=smpc.content-abroad.nav.1.1739375954055TcEvWsY)

|

||||

* [新闻](http://news.sohu.com/?spm=smpc.content-abroad.nav.2.1739375954055TcEvWsY)

|

||||

* [体育](http://sports.sohu.com/?spm=smpc.content-abroad.nav.3.1739375954055TcEvWsY)

|

||||

* [汽车](http://auto.sohu.com/?spm=smpc.content-abroad.nav.4.1739375954055TcEvWsY)

|

||||

* [房产](http://www.focus.cn/?spm=smpc.content-abroad.nav.5.1739375954055TcEvWsY)

|

||||

* [旅游](http://travel.sohu.com/?spm=smpc.content-abroad.nav.6.1739375954055TcEvWsY)

|

||||

* [教育](http://learning.sohu.com/?spm=smpc.content-abroad.nav.7.1739375954055TcEvWsY)

|

||||

* [时尚](http://fashion.sohu.com/?spm=smpc.content-abroad.nav.8.1739375954055TcEvWsY)

|

||||

* [科技](http://it.sohu.com/?spm=smpc.content-abroad.nav.9.1739375954055TcEvWsY)

|

||||

* [财经](http://business.sohu.com/?spm=smpc.content-abroad.nav.10.17393759......</div>

|

||||

</details>

|

||||

|

||||

"""

|

||||

def validate_path():

|

||||

|

||||

@@ -48,8 +48,6 @@ if __name__ == "__main__":

|

||||

|

||||

# plugin_test(plugin='crazy_functions.下载arxiv论文翻译摘要->下载arxiv论文并翻译摘要', main_input="1812.10695")

|

||||

|

||||

# plugin_test(plugin='crazy_functions.联网的ChatGPT->连接网络回答问题', main_input="谁是应急食品?")

|

||||

|

||||

# plugin_test(plugin='crazy_functions.解析JupyterNotebook->解析ipynb文件', main_input="crazy_functions/test_samples")

|

||||

|

||||

# plugin_test(plugin='crazy_functions.数学动画生成manim->动画生成', main_input="A ball split into 2, and then split into 4, and finally split into 8.")

|

||||

|

||||

@@ -48,8 +48,6 @@ if __name__ == "__main__":

|

||||

|

||||

# plugin_test(plugin='crazy_functions.下载arxiv论文翻译摘要->下载arxiv论文并翻译摘要', main_input="1812.10695")

|

||||

|

||||

# plugin_test(plugin='crazy_functions.联网的ChatGPT->连接网络回答问题', main_input="谁是应急食品?")

|

||||

|

||||

# plugin_test(plugin='crazy_functions.解析JupyterNotebook->解析ipynb文件', main_input="crazy_functions/test_samples")

|

||||

|

||||

# plugin_test(plugin='crazy_functions.数学动画生成manim->动画生成', main_input="A ball split into 2, and then split into 4, and finally split into 8.")

|

||||

|

||||

@@ -311,3 +311,24 @@

|

||||

backdrop-filter: blur(10px);

|

||||

background-color: rgba(var(--block-background-fill), 0.5);

|

||||

}

|

||||

|

||||

|

||||

.reasoning_process {

|

||||

font-size: smaller;

|

||||

font-style: italic;

|

||||

margin: 0px;

|

||||

padding: 1em;

|

||||

line-height: 1.5;

|

||||

text-wrap: wrap;

|

||||

opacity: 0.8;

|

||||

}

|

||||

|

||||

.search_result {

|

||||

font-size: smaller;

|

||||

font-style: italic;

|

||||

margin: 0px;

|

||||

padding: 1em;

|

||||

line-height: 1.5;

|

||||

text-wrap: wrap;

|

||||

opacity: 0.8;

|

||||

}

|

||||

|

||||
